KNN in OpenCV

The code below is the implementation from OpenCV-Python-Tutorial-中文版.pdf (p. 261).

Download links for all demos in this series:

https://github.com/GaoRenBao/OpenCv4-Demo

https://gitee.com/fuckgrb/OpenCv4-Demo

The OpenCv version and development environment used for each language:

| Language | OpenCv version | IDE |
|---|---|---|
| C# | OpenCvSharp4.4.8.0.20230708 | Visual Studio 2022 |
| C++ | OpenCv-4.5.5-vc14_vc15 | Visual Studio 2022 |
| Python | OpenCv-Python (4.6.0.66) | PyCharm Community Edition 2022.1.3 |

For a detailed explanation of KNN, please refer to the PDF; only the test code is provided here!

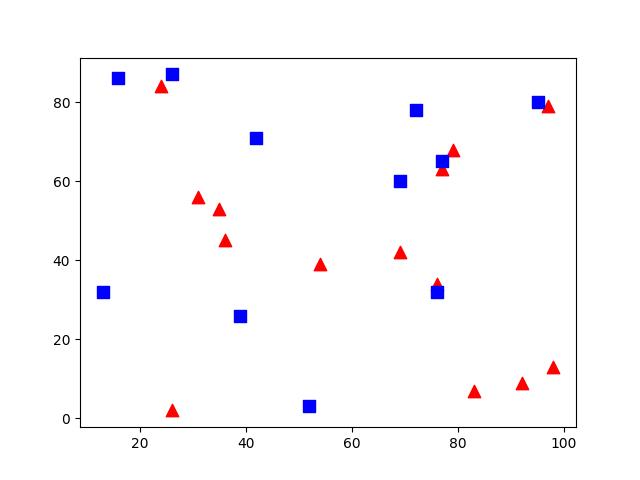

Plot after running KNN (somehow it doesn't look quite right...)

C# version code:

[omitted]

C++ version code:

[omitted]

Python version code:

http://www.bogotobogo.com/python/OpenCV_Python/python_opencv3_Machine_Learning_Classification_K-nearest_neighbors_k-NN.php

```python
import cv2
import numpy as np
import matplotlib.pyplot as plt

# Feature set containing (x,y) values of 25 known/training data
trainData = np.random.randint(0, 100, (25, 2)).astype(np.float32)

# Labels each one either Red or Blue with numbers 0 and 1
responses = np.random.randint(0, 2, (25, 1)).astype(np.float32)

# Take Red families and plot them
red = trainData[responses.ravel() == 0]
plt.scatter(red[:, 0], red[:, 1], 80, 'r', '^')

# Take Blue families and plot them
blue = trainData[responses.ravel() == 1]
plt.scatter(blue[:, 0], blue[:, 1], 80, 'b', 's')

plt.show()
```

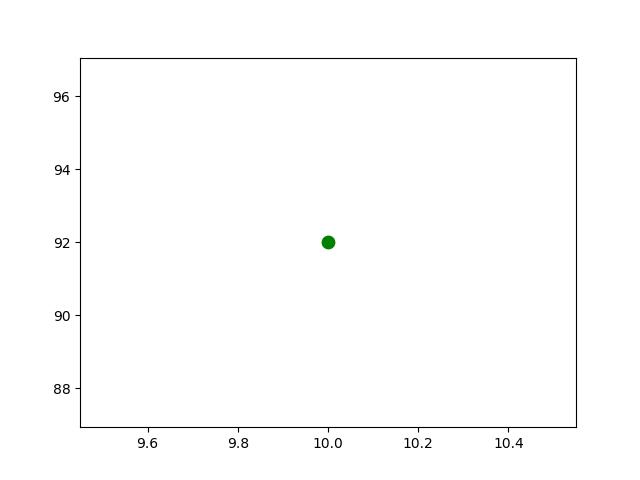

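```python
# The test data is marked in green.
# findNearest returns:
# 1. The label (0 or 1) that the kNN algorithm assigns to the test data.
#    To use the plain nearest-neighbour algorithm, just set k to 1 (k is the number of nearest neighbours).
# 2. The labels of the k nearest neighbours.
# 3. The distance from each nearest neighbour to the test data.
newcomer = np.random.randint(0, 100, (1, 2)).astype(np.float32)

# plt.show() above has already displayed (and, in a normal script, closed) the first
# figure, so redraw the training points before adding the newcomer
plt.scatter(red[:, 0], red[:, 1], 80, 'r', '^')
plt.scatter(blue[:, 0], blue[:, 1], 80, 'b', 's')
plt.scatter(newcomer[:, 0], newcomer[:, 1], 80, 'g', 'o')

knn = cv2.ml.KNearest_create()
knn.train(trainData, cv2.ml.ROW_SAMPLE, responses)
ret, results, neighbours, dist = knn.findNearest(newcomer, 3)

print("result: ", results, "\n")
print("neighbours: ", neighbours, "\n")
print("distance: ", dist)

plt.show()
```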
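The comments above list the three values that `findNearest` returns. The NumPy sketch below shows what they are for a single sample. `find_nearest` is a hypothetical helper written for illustration and is not part of OpenCV. As far as I know, OpenCV's `dist` holds squared Euclidean distances, so this sketch returns those too. Its tie-breaking (stable sort, then the smallest label wins a tied vote) is my own choice and may differ from OpenCV's.

```python
import numpy as np

def find_nearest(train_data, responses, sample, k):
    """Hypothetical NumPy stand-in for knn.findNearest on one (1, d) sample.

    Returns (result, neighbours, dist) shaped (1, 1), (1, k), (1, k).
    """
    # Squared Euclidean distance from the sample to every training point
    d2 = np.sum((train_data - sample) ** 2, axis=1)
    # Indices of the k closest training points, nearest first
    idx = np.argsort(d2, kind="stable")[:k]
    neighbours = responses.ravel()[idx]
    # Majority vote: np.unique sorts the labels, so argmax favours the smallest label on a tie
    labels, counts = np.unique(neighbours, return_counts=True)
    result = labels[np.argmax(counts)]
    return (np.array([[result]], dtype=np.float32),
            neighbours.reshape(1, k).astype(np.float32),
            d2[idx].reshape(1, k).astype(np.float32))

# Toy data chosen by hand (not from the tutorial)
train = np.array([[0, 0], [1, 0], [0, 1], [10, 10], [11, 10]], dtype=np.float32)
resp = np.array([[0], [0], [1], [1], [1]], dtype=np.float32)
result, neighbours, dist = find_nearest(train, resp, np.array([[1, 1]], dtype=np.float32), 3)
print(result.tolist(), neighbours.tolist(), dist.tolist())  # [[0.0]] [[0.0, 1.0, 0.0]] [[1.0, 1.0, 2.0]]
```

Two of the three nearest neighbours have label 0, so the vote gives 0, even though the two closest points are tied at distance 1.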

```python
# With a large amount of test data, we can pass in an array directly;
# the corresponding results are arrays as well.
# 10 new comers
newcomers = np.random.randint(0, 100, (10, 2)).astype(np.float32)
ret, results, neighbours, dist = knn.findNearest(newcomers, 3)
# The results will also contain 10 labels.
```
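For a batch, every row of the input is classified independently. The hedged sketch below computes all query-to-training distances at once with NumPy broadcasting. `find_nearest_batch` is a hypothetical helper, not an OpenCV function. Its vote assumes two classes labelled 0/1 and an odd `k`, as in this example. The printed shapes are what this sketch produces, and they match the sizes the comment above describes for `findNearest`.

```python
import numpy as np

def find_nearest_batch(train_data, responses, samples, k):
    """Hypothetical NumPy stand-in for knn.findNearest on an (m, d) batch (labels 0/1, odd k)."""
    # (m, n) squared distances via broadcasting: (m, 1, d) - (1, n, d)
    d2 = np.sum((samples[:, None, :] - train_data[None, :, :]) ** 2, axis=2)
    # For each row, indices of the k nearest training points, nearest first
    idx = np.argsort(d2, axis=1, kind="stable")[:, :k]
    neighbours = responses.ravel()[idx]              # (m, k) neighbour labels
    dist = np.take_along_axis(d2, idx, axis=1)       # (m, k) squared distances
    # Binary majority vote: label 1 wins when more than half of the neighbours are 1
    results = (neighbours.mean(axis=1, keepdims=True) > 0.5).astype(np.float32)
    return results, neighbours.astype(np.float32), dist.astype(np.float32)

rng = np.random.default_rng(0)
train_data = rng.integers(0, 100, (25, 2)).astype(np.float32)
responses = rng.integers(0, 2, (25, 1)).astype(np.float32)
newcomers = rng.integers(0, 100, (10, 2)).astype(np.float32)

results, neighbours, dist = find_nearest_batch(train_data, responses, newcomers, 3)
print(results.shape, neighbours.shape, dist.shape)  # (10, 1) (10, 3) (10, 3)
```

The broadcasted matrix costs O(m·n·d) memory. That is fine for toy sizes like these, but large datasets would need chunking or a spatial index.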

Code from CSDN:

Source: https://blog.csdn.net/qq_41895747/article/details/87926205

```python
import numpy as np
import cv2
import matplotlib.pyplot as plt

# Set the plot style
plt.style.use('ggplot')
```

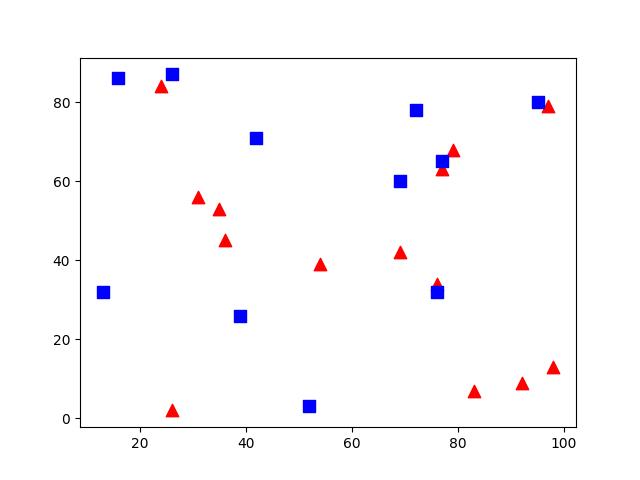

```python
# Generate training data
# Inputs: the number of data points and the number of features per data point
def generate_data(num_samples, num_features):
    data_size = (num_samples, num_features)
    data = np.random.randint(0, 100, size=data_size)
    labels_size = (num_samples, 1)
    labels = np.random.randint(0, 2, size=labels_size)
    return data.astype(np.float32), labels  # make sure the data is converted to np.float32

train_data, labels = generate_data(11, 2)

# Plot the blue and red points separately
def plot_data(all_blue, all_red):
    plt.scatter(all_blue[:, 0], all_blue[:, 1], c='b', marker='s', s=180)
    plt.scatter(all_red[:, 0], all_red[:, 1], c='r', marker='^', s=180)
    plt.xlabel('x coordinate (feature 1)')
    plt.ylabel('y coordinate (feature 2)')

print("train_data:", train_data)  # inspect the dataset

# Flatten the labels with ravel() and split the points by class
blue = train_data[labels.ravel() == 0]
red = train_data[labels.ravel() == 1]
plot_data(blue, red)
plt.show()

# Train the classifier
knn = cv2.ml.KNearest_create()  # instantiate
knn.train(train_data, cv2.ml.ROW_SAMPLE, labels)

# Create a new data point for findNearest to classify
newcomer, _ = generate_data(1, 2)
plot_data(blue, red)
plt.plot(newcomer[0, 0], newcomer[0, 1], 'go', markersize=14)
plt.show()
```
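The CSDN excerpt stops after plotting the newcomer, before the `findNearest` call that its comment announces. As the earlier comment notes, setting k to 1 reduces KNN to the plain nearest-neighbour rule. The hedged NumPy sketch below shows that the choice of k can flip the predicted class for the same point. The `knn_vote` helper and the toy data are hypothetical and are not OpenCV code. The labels follow the CSDN convention, where 0 is blue and 1 is red.

```python
import numpy as np

def knn_vote(train_data, labels, sample, k):
    """Hypothetical helper: the label with the most votes among the k nearest points."""
    d2 = np.sum((train_data - sample) ** 2, axis=1)  # squared Euclidean distances
    nearest = labels.ravel()[np.argsort(d2, kind="stable")[:k]]
    values, counts = np.unique(nearest, return_counts=True)
    return int(values[np.argmax(counts)])

# Hand-picked toy data: one red point (label 1) right next to the query,
# two blue points (label 0) slightly farther away, one distant red point
train_data = np.array([[50, 50], [53, 50], [50, 53], [90, 90]], dtype=np.float32)
labels = np.array([[1], [0], [0], [1]], dtype=np.float32)
newcomer = np.array([[51, 51]], dtype=np.float32)

print(knn_vote(train_data, labels, newcomer, 1))  # 1 (the single nearest point is red)
print(knn_vote(train_data, labels, newcomer, 3))  # 0 (two of the three nearest are blue)
```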