Epipolar Geometry

The code below implements the example from OpenCV-Python-Tutorial-中文版.pdf (p. 254).

All demos in this series can be downloaded from:

https://github.com/GaoRenBao/OpenCv4-Demo

https://gitee.com/fuckgrb/OpenCv4-Demo

The OpenCV version and development environment used for each language are as follows:

| Language | OpenCV version | IDE |
| --- | --- | --- |
| C# | OpenCvSharp4.4.8.0.20230708 | Visual Studio 2022 |
| C++ | OpenCv-4.5.5-vc14_vc15 | Visual Studio 2022 |
| Python | OpenCv-Python (4.6.0.66) | PyCharm Community Edition 2022.1.3 |

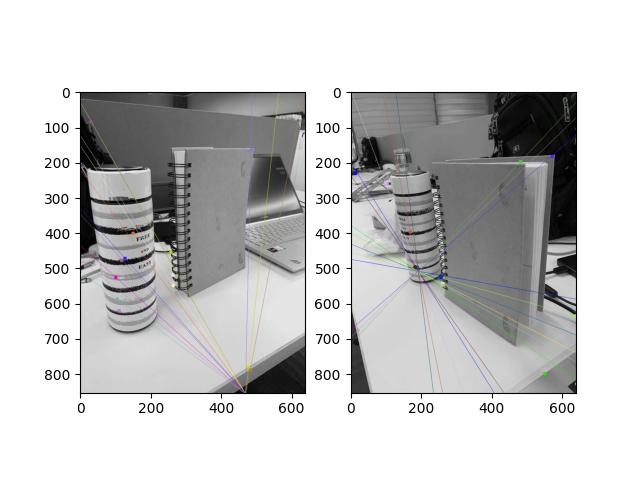

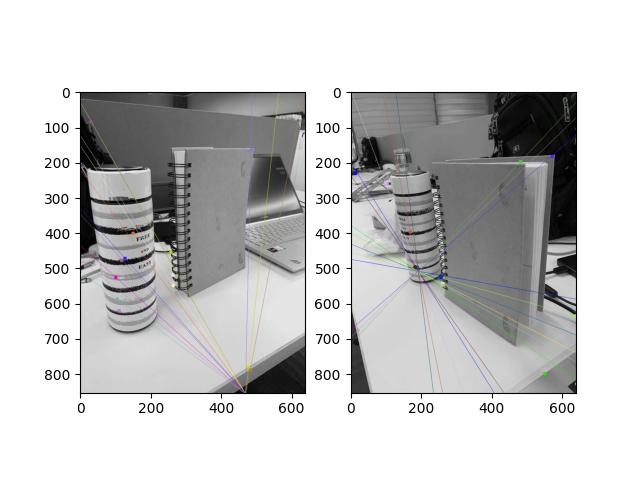

I could not find the original test images used by the official tutorial, so I took two photos of my own for testing.

Test results:

C# version:

C++ version:

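    #include <opencv2/opencv.hpp>
    #include <cmath>
    #include <iostream>
    #include <vector>

    using namespace cv;
    using namespace std;

    // Draw every epiline a*x + b*y + c = 0 across the full width of img.
    static void drawEpilines(Mat& img, const vector<Vec3f>& lines, RNG& rng)
    {
        for (const Vec3f& l : lines)
        {
            // Skip (near-)vertical lines: the endpoint formula divides by b.
            if (std::abs(l[1]) < 1e-6f) continue;
            Scalar color(rng(256), rng(256), rng(256));
            line(img, Point(0, cvRound(-l[2] / l[1])),
                 Point(img.cols, cvRound(-(l[2] + l[0] * img.cols) / l[1])), color);
        }
    }

    int main()
    {
        // Load the left and right views as grayscale images.
        Mat img1 = imread("../images/myleft.jpg", IMREAD_GRAYSCALE);
        Mat img2 = imread("../images/myright.jpg", IMREAD_GRAYSCALE);
        if (img1.empty() || img2.empty())
        {
            cerr << "Failed to load the input images" << endl;
            return 1;
        }

        // Detect SIFT keypoints and compute their descriptors.
        Ptr<SIFT> sift = SIFT::create();
        Mat des1, des2;
        vector<KeyPoint> kp1, kp2;
        sift->detectAndCompute(img1, noArray(), kp1, des1);
        sift->detectAndCompute(img2, noArray(), kp2, des2);

        // FLANN matcher with a KD-tree index (5 trees, 50 checks).
        // KDTreeIndexParams already selects the KD-tree algorithm;
        // calling setAlgorithm(0) would switch it to linear search.
        Ptr<flann::IndexParams> index_params = makePtr<flann::KDTreeIndexParams>(5);
        Ptr<flann::SearchParams> search_params = makePtr<flann::SearchParams>(50);
        FlannBasedMatcher matcher(index_params, search_params);
        vector<vector<DMatch>> matches;
        matcher.knnMatch(des1, des2, matches, 2);

        // Ratio test as per Lowe's paper.
        vector<Point2f> pts1, pts2;
        for (size_t i = 0; i < matches.size(); i++)
        {
            if (matches[i].size() < 2) continue;
            if (matches[i][0].distance < 0.7f * matches[i][1].distance)
            {
                pts2.push_back(kp2[matches[i][0].trainIdx].pt);
                pts1.push_back(kp1[matches[i][0].queryIdx].pt);
            }
        }

        // Use the matched point lists to compute the fundamental matrix.
        Mat mask;
        Mat F = findFundamentalMat(pts1, pts2, mask, FM_LMEDS);
        if (F.empty())
        {
            cerr << "Could not estimate the fundamental matrix" << endl;
            return 1;
        }

        // Keep only the inlier matches, as the Python version does.
        vector<Point2f> in1, in2;
        for (size_t i = 0; i < pts1.size(); i++)
        {
            if (mask.at<uchar>(static_cast<int>(i)))
            {
                in1.push_back(pts1[i]);
                in2.push_back(pts2[i]);
            }
        }
        if (in1.empty())
        {
            cerr << "No inlier matches left" << endl;
            return 1;
        }

        // The second argument names the image the points come from;
        // the returned lines live in the other image.
        vector<Vec3f> epilines1, epilines2;
        computeCorrespondEpilines(in2, 2, F, epilines1); // lines in img1
        computeCorrespondEpilines(in1, 1, F, epilines2); // lines in img2

        // Convert to BGR so the random line colors are actually visible.
        cvtColor(img1, img1, COLOR_GRAY2BGR);
        cvtColor(img2, img2, COLOR_GRAY2BGR);

        RNG& rng = theRNG();
        drawEpilines(img1, epilines1, rng);
        drawEpilines(img2, epilines2, rng);

        imshow("img1", img1);
        imshow("img2", img2);
        waitKey(0);
        return 0;
    }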
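For reference, the image-index argument of `computeCorrespondEpilines` and the endpoint arithmetic in the drawing loops both follow from the epipolar constraint. The summary below is a short sketch that assumes OpenCV's documented convention (points from the first image multiply F on the right). The symbols $x_1$, $x_2$, $l_1$, $l_2$ and the image width $w$ are notation introduced here, not names from the code.

$$
\begin{aligned}
x_2^{\top} F \, x_1 &= 0 \\
l_2 &= F \, x_1 \quad (\text{points from image 1, index } 1), \qquad
l_1 = F^{\top} x_2 \quad (\text{points from image 2, index } 2) \\
l = (a, b, c): \quad a x + b y + c &= 0
\;\Rightarrow\;
y\big|_{x=0} = -\frac{c}{b}, \qquad
y\big|_{x=w} = -\frac{c + a w}{b}
\end{aligned}
$$

In other words, points taken from one image always produce lines that belong on the other image, and the two endpoint formulas are undefined for a vertical line ($b = 0$).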

Python version:

References:

https://docs.opencv.org/4.5.5/da/de9/tutorial_py_epipolar_geometry.html

https://blog.csdn.net/zhoujinwang/article/details/128349114

    import cv2
    import numpy as np
    from matplotlib import pyplot as plt


    # Draw the epilines on img1 and mark the matched points in both images
    def drawlines(img1, img2, lines, pts1, pts2):
        ''' img1 - image on which we draw the epilines for the points in img2
            lines - corresponding epilines '''
        rows, cols = img1.shape
        img1 = cv2.cvtColor(img1, cv2.COLOR_GRAY2BGR)
        img2 = cv2.cvtColor(img2, cv2.COLOR_GRAY2BGR)
        for r, pt1, pt2 in zip(lines, pts1, pts2):
            # Skip (near-)vertical lines: the endpoint formula divides by r[1]
            if abs(r[1]) < 1e-6:
                continue
            color = tuple(np.random.randint(0, 255, 3).tolist())
            x0, y0 = map(int, [0, -r[2] / r[1]])
            x1, y1 = map(int, [cols, -(r[2] + r[0] * cols) / r[1]])
            img1 = cv2.line(img1, (x0, y0), (x1, y1), color, 1)
            # Pass plain Python ints; NumPy integers can be rejected by cv2.circle
            img1 = cv2.circle(img1, tuple(map(int, pt1)), 5, color, -1)
            img2 = cv2.circle(img2, tuple(map(int, pt2)), 5, color, -1)
        return img1, img2


    img1 = cv2.imread('../images/myleft.jpg', 0)   # query image (left)
    img2 = cv2.imread('../images/myright.jpg', 0)  # train image (right)
    if img1 is None or img2 is None:
        raise FileNotFoundError('Failed to load the input images')

    sift = cv2.SIFT_create()
    # sift = cv2.xfeatures2d.SIFT_create()

    # Find the keypoints and descriptors with SIFT
    kp1, des1 = sift.detectAndCompute(img1, None)
    kp2, des2 = sift.detectAndCompute(img2, None)

    # FLANN parameters. In FLANN's algorithm enum, 1 selects the KD-tree index;
    # 0 would select linear (brute-force) search.
    FLANN_INDEX_KDTREE = 1
    index_params = dict(algorithm=FLANN_INDEX_KDTREE, trees=5)
    search_params = dict(checks=50)

    flann = cv2.FlannBasedMatcher(index_params, search_params)
    matches = flann.knnMatch(des1, des2, k=2)

    pts1 = []
    pts2 = []

    # Ratio test as per Lowe's paper
    for m, n in matches:
        if m.distance < 0.7 * n.distance:
            pts2.append(kp2[m.trainIdx].pt)
            pts1.append(kp1[m.queryIdx].pt)

    # Use the matched point lists to compute the fundamental matrix
    pts1 = np.int32(pts1)
    pts2 = np.int32(pts2)
    F, mask = cv2.findFundamentalMat(pts1, pts2, cv2.FM_LMEDS)

    # Keep only the inlier points
    pts1 = pts1[mask.ravel() == 1]
    pts2 = pts2[mask.ravel() == 1]

    # Compute and draw the epilines for both images.
    # Find epilines corresponding to points in the right image (second image)
    # and draw them on the left image
    lines1 = cv2.computeCorrespondEpilines(pts2.reshape(-1, 1, 2), 2, F)
    lines1 = lines1.reshape(-1, 3)
    img5, img6 = drawlines(img1, img2, lines1, pts1, pts2)

    # Find epilines corresponding to points in the left image (first image)
    # and draw them on the right image
    lines2 = cv2.computeCorrespondEpilines(pts1.reshape(-1, 1, 2), 1, F)
    lines2 = lines2.reshape(-1, 3)
    img3, img4 = drawlines(img2, img1, lines2, pts2, pts1)

    plt.subplot(121), plt.imshow(img5)
    plt.subplot(122), plt.imshow(img3)
    plt.show()

    # As the figure shows, all epilines converge at a single point outside
    # the image; that point is the epipole.
    # For better results, use higher-resolution images and non-planar points.
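The epipole mentioned in the closing comment can also be computed from F directly instead of being read off the plot. The sketch below assumes F is a rank-2 3×3 matrix in OpenCV's convention, so the epipole of the first image is the right null vector of F and the epipole of the second image is the left null vector. The function name `epipoles_from_fundamental` and the demo matrix are hypothetical and exist only for illustration.

```python
import numpy as np


def epipoles_from_fundamental(F, eps=1e-9):
    """Return (e1, e2): the epipoles of image 1 and image 2 in pixel coordinates.

    An epipole is returned as None when it lies at infinity
    (its homogeneous coordinate is numerically zero).
    """
    F = np.asarray(F, dtype=np.float64)
    # SVD: F = U @ diag(s) @ Vt, singular values sorted in descending order.
    U, s, Vt = np.linalg.svd(F)
    e1_h = Vt[-1]     # right null vector: F @ e1_h is approximately 0
    e2_h = U[:, -1]   # left null vector: F.T @ e2_h is approximately 0

    def to_pixel(e_h):
        # Convert a unit-norm homogeneous vector (x, y, w) to (x / w, y / w).
        if abs(e_h[2]) < eps:
            return None
        return e_h[:2] / e_h[2]

    return to_pixel(e1_h), to_pixel(e2_h)


if __name__ == "__main__":
    # Hypothetical demo matrix: the skew-symmetric matrix of t = (2, 3, 1).
    # F @ t = 0 and F.T @ t = 0, so both epipoles sit at pixel (2, 3).
    F_demo = np.array([[0.0, -1.0, 3.0],
                       [1.0, 0.0, -2.0],
                       [-3.0, 2.0, 0.0]])
    e1, e2 = epipoles_from_fundamental(F_demo)
    print("epipole in image 1:", e1)
    print("epipole in image 2:", e2)
```

With the `F` estimated by `cv2.findFundamentalMat` in the listing above, calling `epipoles_from_fundamental(F)` should return coordinates close to the point where the drawn epilines meet, which may well lie outside the image bounds.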