Finding a Known Object (Part 2)


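In this chapter I demonstrate how to use SURF and SIFT to locate a known object in a scene and outline it once it has been found. Main functions: SURF and SIFT.

The C++ code in this chapter is again based on Mao Xingyun's code framework, with a few modifications.

Download link for all demos in this series: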

https://github.com/GaoRenBao/OpenCv4-Demo

The OpenCV version and development environment used for each language are as follows:

| Language | OpenCV version | IDE |
| --- | --- | --- |
| C# | OpenCvSharp4.4.8.0.20230708 | Visual Studio 2022 |
| C++ | OpenCv-4.5.5-vc14_vc15 | Visual Studio 2022 |
| Python | OpenCv-Python (4.6.0.66) | PyCharm Community Edition 2022.1.3 |

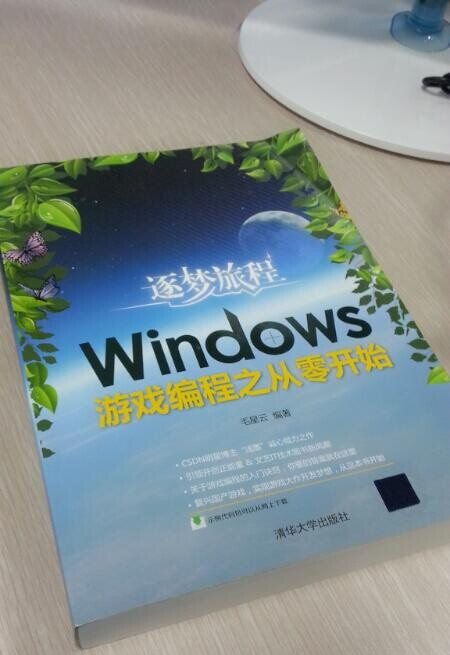

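First, we need two test images. I don't recommend using Mao Xingyun's two test images as they are: they don't give very good results, so they need some cropping. The prepared images are shown below; one is the image to look for, and the other is the target image to search in.

Since only the C# version implements both the SURF and the SIFT search, I show the C# results directly here.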

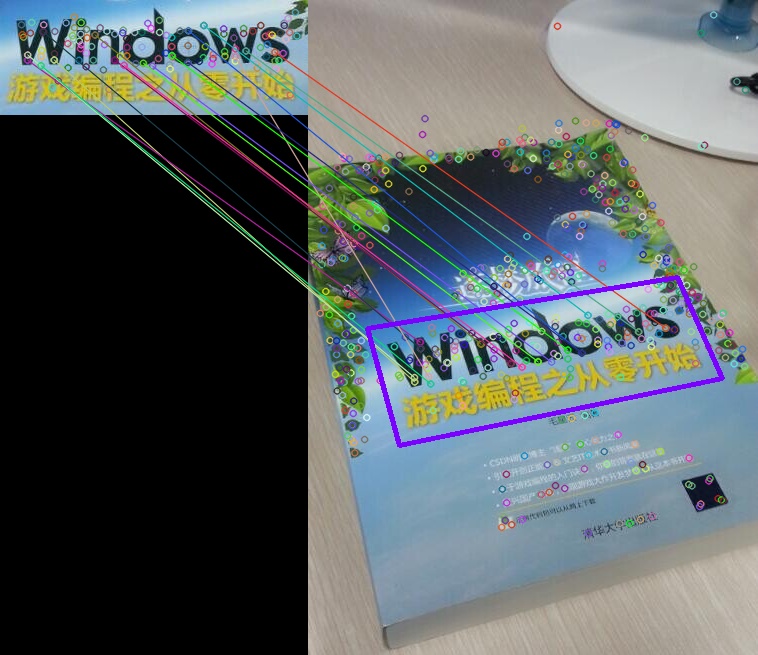

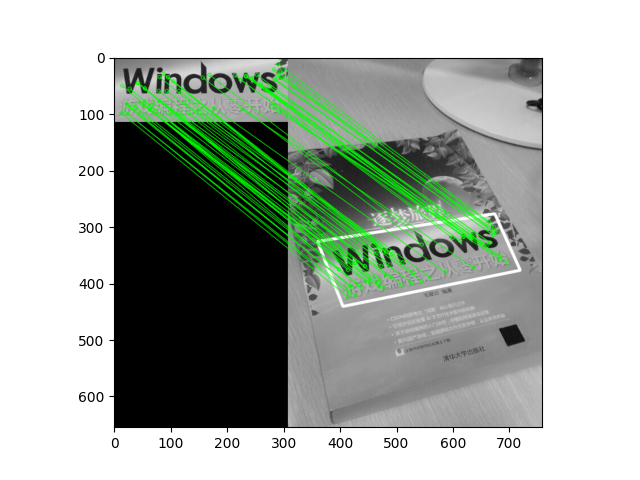

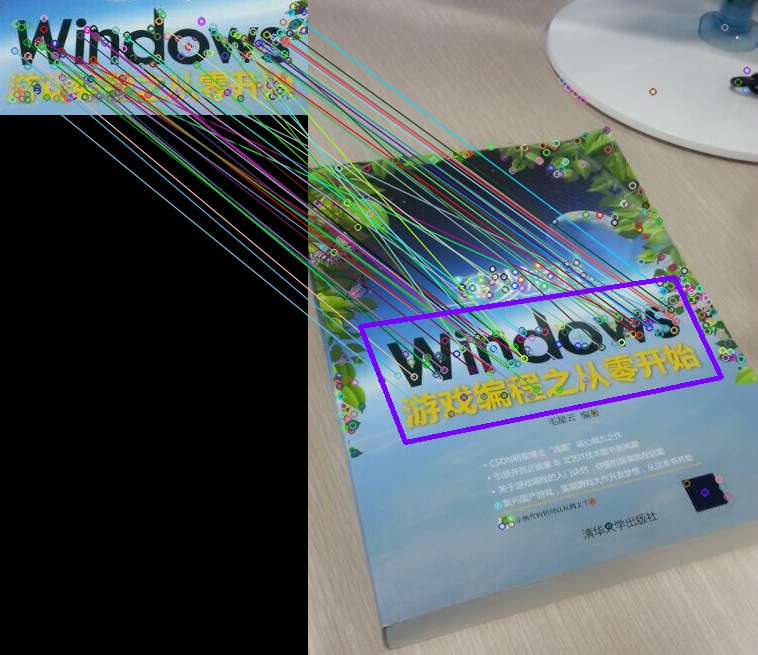

First, the SURF result:

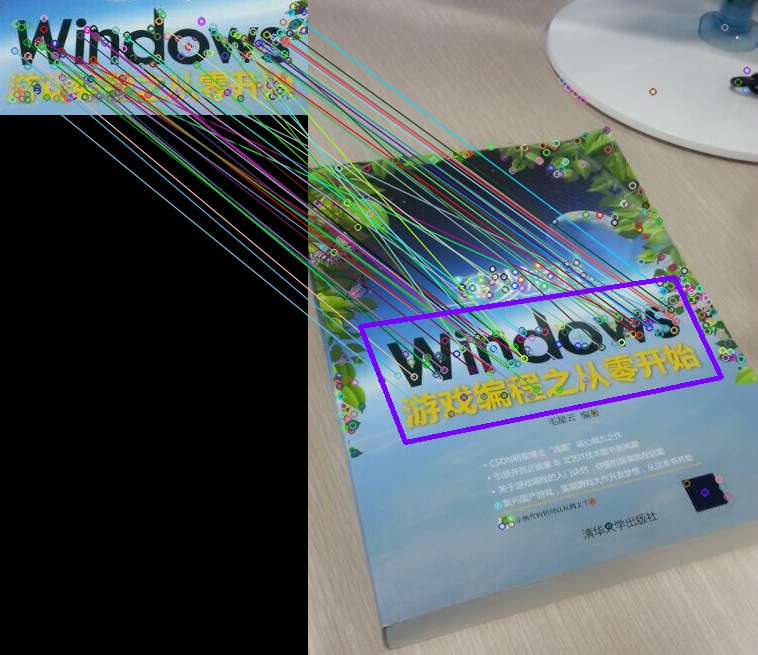

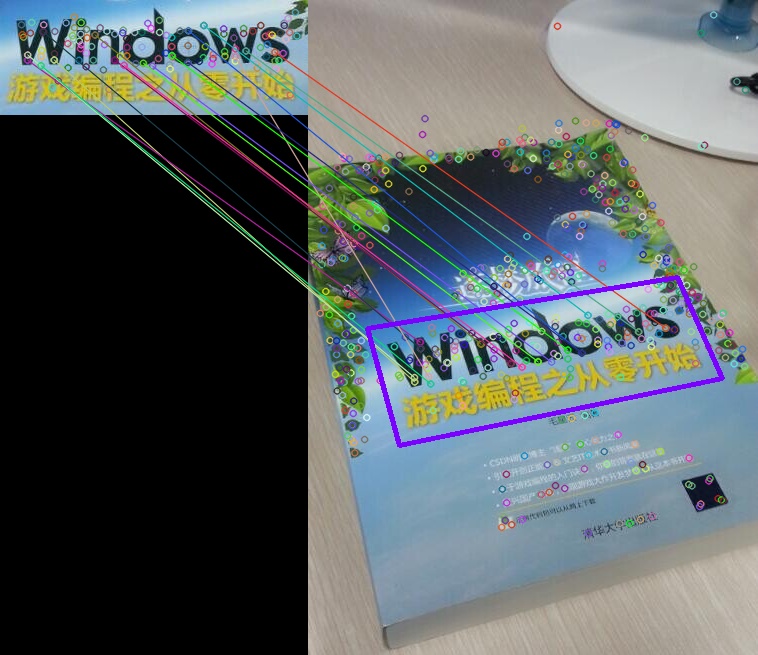

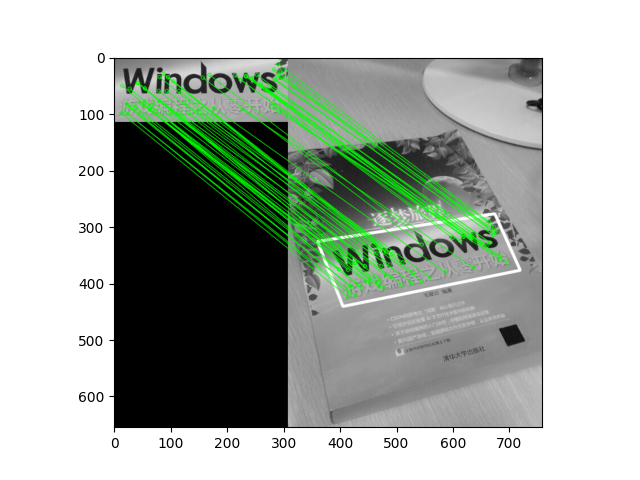

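Then the SIFT result:

Building on this, I wrote a program that runs the detection on video; the result is shown below. Interested readers can try modifying and testing the code themselves. The source for the video version is not provided for now.

The two versions do not seem to differ much, although SIFT appears to produce more points.

The C# version is as follows: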

using OpenCvSharp;
using System.Collections.Generic;

namespace demo
{
    internal class Program
    {
        static void Main(string[] args)
        {
            SURF();
            SIFT();
            Cv2.WaitKey();
        }

        // Convert a Point2f to a Point2d, keeping sub-pixel precision
        // (the earlier cast to int truncated the keypoint coordinates)
        public static Point2d Point2fToPoint2d(Point2f pf)
        {
            return new Point2d(pf.X, pf.Y);
        }

        /// <summary>
        /// Locate the object with SURF features and outline it in the scene.
        /// </summary>
        private static void SURF()
        {
            // Load the object image and the scene image
            Mat srcImage1 = Cv2.ImRead("../../../images/book_box.jpg");
            Mat srcImage2 = Cv2.ImRead("../../../images/book2.jpg");

            // Keypoints and descriptors for both images
            KeyPoint[] keypoints_object, keypoints_scene;
            Mat descriptors_object = new Mat();
            Mat descriptors_scene = new Mat();

            // Create the SURF detector; for SURF, 400 is the Hessian threshold
            var MySurf = OpenCvSharp.XFeatures2D.SURF.Create(400);

            // Option 1: compute descriptors with separate Detect and Compute calls
            keypoints_object = MySurf.Detect(srcImage1);
            keypoints_scene = MySurf.Detect(srcImage2);
            MySurf.Compute(srcImage1, ref keypoints_object, descriptors_object);
            MySurf.Compute(srcImage2, ref keypoints_scene, descriptors_scene);

            // Option 2: compute descriptors with a single DetectAndCompute call
            //MySurf.DetectAndCompute(srcImage1, null, out keypoints_object, descriptors_object);
            //MySurf.DetectAndCompute(srcImage2, null, out keypoints_scene, descriptors_scene);

            // Match the descriptors with a FLANN-based matcher
            FlannBasedMatcher matcher = new FlannBasedMatcher();
            DMatch[] matches = matcher.Match(descriptors_object, descriptors_scene);

            // Find the smallest and largest match distance.
            // min_dist starts at double.MaxValue so that the first real distance always replaces it.
            double max_dist = 0;
            double min_dist = double.MaxValue;
            for (int i = 0; i < matches.Length; i++)
            {
                double dist = matches[i].Distance;
                if (dist < min_dist) min_dist = dist;
                if (dist > max_dist) max_dist = dist;
            }
            System.Diagnostics.Debug.WriteLine($">Max dist : {max_dist}");
            System.Diagnostics.Debug.WriteLine($">Min dist : {min_dist}");

            // Keep the matches whose distance is below 3 * min_dist
            List<DMatch> good_matches = new List<DMatch>();
            for (int i = 0; i < matches.Length; i++)
            {
                if (matches[i].Distance < 3 * min_dist)
                {
                    good_matches.Add(matches[i]);
                }
            }

            // Draw the good matches side by side
            Mat img_matches = new Mat();
            Cv2.DrawMatches(srcImage1, keypoints_object, srcImage2, keypoints_scene, good_matches, img_matches);

            // Matched point coordinates in the object and in the scene
            List<Point2f> obj = new List<Point2f>();
            List<Point2f> scene = new List<Point2f>();
            for (int i = 0; i < good_matches.Count; i++)
            {
                obj.Add(keypoints_object[good_matches[i].QueryIdx].Pt);
                scene.Add(keypoints_scene[good_matches[i].TrainIdx].Pt);
            }

            // Estimate the perspective transform (homography) with RANSAC
            List<Point2d> objPts = obj.ConvertAll(Point2fToPoint2d);
            List<Point2d> scenePts = scene.ConvertAll(Point2fToPoint2d);
            Mat H = Cv2.FindHomography(objPts, scenePts, HomographyMethods.Ransac);

            // The four corners of the object image
            List<Point2f> obj_corners = new List<Point2f>();
            obj_corners.Add(new Point(0, 0));
            obj_corners.Add(new Point(srcImage1.Cols, 0));
            obj_corners.Add(new Point(srcImage1.Cols, srcImage1.Rows));
            obj_corners.Add(new Point(0, srcImage1.Rows));

            // Map the corners into the scene
            Point2f[] scene_corners = Cv2.PerspectiveTransform(obj_corners, H);

            // Shift the corners right by the object image width (the scene is the right half
            // of img_matches) and draw the outline
            Point2f P0 = scene_corners[0] + new Point2f(srcImage1.Cols, 0);
            Point2f P1 = scene_corners[1] + new Point2f(srcImage1.Cols, 0);
            Point2f P2 = scene_corners[2] + new Point2f(srcImage1.Cols, 0);
            Point2f P3 = scene_corners[3] + new Point2f(srcImage1.Cols, 0);
            Cv2.Line(img_matches, new Point(P0.X, P0.Y), new Point(P1.X, P1.Y), new Scalar(255, 0, 123), 4);
            Cv2.Line(img_matches, new Point(P1.X, P1.Y), new Point(P2.X, P2.Y), new Scalar(255, 0, 123), 4);
            Cv2.Line(img_matches, new Point(P2.X, P2.Y), new Point(P3.X, P3.Y), new Scalar(255, 0, 123), 4);
            Cv2.Line(img_matches, new Point(P3.X, P3.Y), new Point(P0.X, P0.Y), new Scalar(255, 0, 123), 4);

            // Show the final result
            Cv2.ImShow("SURF result", img_matches);
            // Cv2.ImWrite("SURF.jpg", img_matches);
        }

        /// <summary>
        /// Locate the object with SIFT features and outline it in the scene.
        /// </summary>
        private static void SIFT()
        {
            // Load the object image and the scene image
            Mat srcImage1 = Cv2.ImRead("../../../images/book_box.jpg");
            Mat srcImage2 = Cv2.ImRead("../../../images/book2.jpg");

            // Keypoints and descriptors for both images
            KeyPoint[] keypoints_object, keypoints_scene;
            Mat descriptors_object = new Mat();
            Mat descriptors_scene = new Mat();

            // Create the SIFT detector; for SIFT, 400 is nFeatures (the number of best
            // features to retain), not a threshold as in SURF
            var MySift = OpenCvSharp.Features2D.SIFT.Create(400);

            // Option 1: compute descriptors with separate Detect and Compute calls
            keypoints_object = MySift.Detect(srcImage1);
            keypoints_scene = MySift.Detect(srcImage2);
            MySift.Compute(srcImage1, ref keypoints_object, descriptors_object);
            MySift.Compute(srcImage2, ref keypoints_scene, descriptors_scene);

            // Option 2: compute descriptors with a single DetectAndCompute call
            //MySift.DetectAndCompute(srcImage1, null, out keypoints_object, descriptors_object);
            //MySift.DetectAndCompute(srcImage2, null, out keypoints_scene, descriptors_scene);

            // Match the descriptors with a FLANN-based matcher
            FlannBasedMatcher matcher = new FlannBasedMatcher();
            DMatch[] matches = matcher.Match(descriptors_object, descriptors_scene);

            // Find the smallest and largest match distance.
            // SIFT distances can all exceed 100, so min_dist starts at double.MaxValue instead.
            double max_dist = 0;
            double min_dist = double.MaxValue;
            for (int i = 0; i < matches.Length; i++)
            {
                double dist = matches[i].Distance;
                if (dist < min_dist) min_dist = dist;
                if (dist > max_dist) max_dist = dist;
            }
            System.Diagnostics.Debug.WriteLine($">Max dist : {max_dist}");
            System.Diagnostics.Debug.WriteLine($">Min dist : {min_dist}");

            // Keep the matches whose distance is below 3 * min_dist
            List<DMatch> good_matches = new List<DMatch>();
            for (int i = 0; i < matches.Length; i++)
            {
                if (matches[i].Distance < 3 * min_dist)
                {
                    good_matches.Add(matches[i]);
                }
            }

            // Draw the good matches side by side
            Mat img_matches = new Mat();
            Cv2.DrawMatches(srcImage1, keypoints_object, srcImage2, keypoints_scene, good_matches, img_matches);

            // Matched point coordinates in the object and in the scene
            List<Point2f> obj = new List<Point2f>();
            List<Point2f> scene = new List<Point2f>();
            for (int i = 0; i < good_matches.Count; i++)
            {
                obj.Add(keypoints_object[good_matches[i].QueryIdx].Pt);
                scene.Add(keypoints_scene[good_matches[i].TrainIdx].Pt);
            }

            // Estimate the perspective transform (homography) with RANSAC
            List<Point2d> objPts = obj.ConvertAll(Point2fToPoint2d);
            List<Point2d> scenePts = scene.ConvertAll(Point2fToPoint2d);
            Mat H = Cv2.FindHomography(objPts, scenePts, HomographyMethods.Ransac);

            // The four corners of the object image
            List<Point2f> obj_corners = new List<Point2f>();
            obj_corners.Add(new Point(0, 0));
            obj_corners.Add(new Point(srcImage1.Cols, 0));
            obj_corners.Add(new Point(srcImage1.Cols, srcImage1.Rows));
            obj_corners.Add(new Point(0, srcImage1.Rows));

            // Map the corners into the scene
            Point2f[] scene_corners = Cv2.PerspectiveTransform(obj_corners, H);

            // Shift the corners right by the object image width (the scene is the right half
            // of img_matches) and draw the outline
            Point2f P0 = scene_corners[0] + new Point2f(srcImage1.Cols, 0);
            Point2f P1 = scene_corners[1] + new Point2f(srcImage1.Cols, 0);
            Point2f P2 = scene_corners[2] + new Point2f(srcImage1.Cols, 0);
            Point2f P3 = scene_corners[3] + new Point2f(srcImage1.Cols, 0);
            Cv2.Line(img_matches, new Point(P0.X, P0.Y), new Point(P1.X, P1.Y), new Scalar(255, 0, 123), 4);
            Cv2.Line(img_matches, new Point(P1.X, P1.Y), new Point(P2.X, P2.Y), new Scalar(255, 0, 123), 4);
            Cv2.Line(img_matches, new Point(P2.X, P2.Y), new Point(P3.X, P3.Y), new Scalar(255, 0, 123), 4);
            Cv2.Line(img_matches, new Point(P3.X, P3.Y), new Point(P0.X, P0.Y), new Scalar(255, 0, 123), 4);

            // Show the final result
            Cv2.ImShow("SIFT result", img_matches);
            // Cv2.ImWrite("SIFT.jpg", img_matches);
        }
    }
}
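The match filter used in both routines above (keep a match only if its distance is below 3 times the smallest distance) does not depend on OpenCV. Below is a minimal Python sketch of that rule, using plain floats in place of the `DMatch` distances. The function name `filter_by_min_distance` and its `ratio` parameter are made up for illustration and are not part of any library.

```python
def filter_by_min_distance(distances, ratio=3.0):
    """Return the indices of matches whose distance is below ratio * min distance.

    `distances` stands in for the DMatch distance values. The function name
    and the `ratio` parameter are illustrative, not an OpenCV API.
    """
    if not distances:
        return []
    # Take the minimum directly from the data, so no initial seed value is needed.
    min_dist = min(distances)
    threshold = ratio * min_dist
    # Strict comparison, as in the listings: a distance equal to the threshold is dropped.
    return [i for i, d in enumerate(distances) if d < threshold]


if __name__ == "__main__":
    # Made-up distances in a range that SIFT descriptors can produce.
    sample = [120.0, 150.0, 400.0, 359.9, 360.0]
    print(filter_by_min_distance(sample))  # indices of the matches that are kept
```

One property worth knowing: if the best match has distance 0, the threshold is also 0 and the strict comparison rejects every match, so this filter is only a rough heuristic.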

The C++ version is as follows:

#include <opencv2/opencv.hpp>
#include <opencv2/highgui/highgui.hpp>
#include <opencv2/features2d.hpp>
#include <cstdio>
#include <limits>
#include <vector>

using namespace cv;
using namespace std;

int main()
{
    // [1] Load the object image and the scene image
    Mat srcImage1 = imread("../images/book_box.jpg");
    Mat srcImage2 = imread("../images/book2.jpg");
    if (srcImage1.empty() || srcImage2.empty())
    {
        printf("Failed to read the images; check that the files passed to imread exist.\n");
        return -1;
    }

    // Create the SIFT detector (400 = nfeatures, the number of best features to retain)
    Ptr<SiftFeatureDetector> detector = SiftFeatureDetector::create(400);

    // Keypoints and descriptors for both images
    vector<KeyPoint> keypoints_object, keypoints_scene;
    Mat descriptors_object, descriptors_scene;

    // Detect the SIFT keypoints and store them in the vectors
    detector->detect(srcImage1, keypoints_object);
    detector->detect(srcImage2, keypoints_scene);

    // Compute the descriptors (feature vectors)
    detector->compute(srcImage1, keypoints_object, descriptors_object);
    detector->compute(srcImage2, keypoints_scene, descriptors_scene);

    // Match the descriptors with the FLANN-based matcher
    FlannBasedMatcher matcher;
    vector<DMatch> matches;
    matcher.match(descriptors_object, descriptors_scene, matches);

    // Find the smallest and largest match distance.
    // SIFT distances can all exceed 100, so min_dist starts at the largest double instead.
    double max_dist = 0;
    double min_dist = std::numeric_limits<double>::max();
    for (size_t i = 0; i < matches.size(); i++)
    {
        double dist = matches[i].distance;
        if (dist < min_dist) min_dist = dist;
        if (dist > max_dist) max_dist = dist;
    }
    printf(">Max dist : %f \n", max_dist);
    printf(">Min dist : %f \n", min_dist);

    // Keep the matches whose distance is below 3 * min_dist
    vector<DMatch> good_matches;
    for (size_t i = 0; i < matches.size(); i++)
    {
        if (matches[i].distance < 3 * min_dist)
        {
            good_matches.push_back(matches[i]);
        }
    }

    // Draw the good matches side by side
    Mat img_matches;
    drawMatches(srcImage1, keypoints_object, srcImage2, keypoints_scene,
        good_matches, img_matches, Scalar::all(-1), Scalar::all(-1),
        vector<char>(), DrawMatchesFlags::NOT_DRAW_SINGLE_POINTS);

    // Matched point coordinates in the object and in the scene
    vector<Point2f> obj;
    vector<Point2f> scene;
    for (size_t i = 0; i < good_matches.size(); i++)
    {
        obj.push_back(keypoints_object[good_matches[i].queryIdx].pt);
        scene.push_back(keypoints_scene[good_matches[i].trainIdx].pt);
    }

    // Estimate the perspective transform (homography) with RANSAC
    Mat H = findHomography(obj, scene, RANSAC);

    // The four corners of the object image
    vector<Point2f> obj_corners(4);
    obj_corners[0] = Point(0, 0);
    obj_corners[1] = Point(srcImage1.cols, 0);
    obj_corners[2] = Point(srcImage1.cols, srcImage1.rows);
    obj_corners[3] = Point(0, srcImage1.rows);
    vector<Point2f> scene_corners(4);

    // Map the corners into the scene
    perspectiveTransform(obj_corners, scene_corners, H);

    // Draw the outline; the scene is the right half of img_matches,
    // so every corner is shifted right by the object image width
    const Point2f offset(static_cast<float>(srcImage1.cols), 0);
    for (int i = 0; i < 4; i++)
    {
        line(img_matches, scene_corners[i] + offset, scene_corners[(i + 1) % 4] + offset, Scalar(255, 0, 123), 4);
    }

    // Show the final result
    imshow("Result", img_matches);
    waitKey(0);
    return 0;
}

The Python version is as follows:

import cv2
import numpy as np

# Load the object image and the scene image
srcImage1 = cv2.imread('../images/book_box.jpg')
srcImage2 = cv2.imread('../images/book2.jpg')

# Create the SIFT detector (400 = nfeatures, the number of best features to retain)
sift = cv2.SIFT_create(400)

# Detect keypoints and compute descriptors
(keypoints_object, descriptors_object) = sift.detectAndCompute(srcImage1, None)
(keypoints_scene, descriptors_scene) = sift.detectAndCompute(srcImage2, None)

# Match the descriptors with the FLANN-based matcher
# matcher = cv2.BFMatcher()  # brute-force alternative
# matches = matcher.match(descriptors_object, descriptors_scene)
matcher = cv2.FlannBasedMatcher()
matches = matcher.match(descriptors_object, descriptors_scene)

# Find the smallest and largest match distance.
# Iterate over the matches themselves: descriptors_object.shape[1] is the
# descriptor length (128 for SIFT), not the number of matches.
max_dist = 0
min_dist = float('inf')
for m in matches:
    dist = m.distance
    if dist < min_dist:
        min_dist = dist
    if dist > max_dist:
        max_dist = dist
print(">Max dist :", max_dist)
print(">Min dist :", min_dist)

# Keep the matches whose distance is below 3 * min_dist
good_matches = []
for m in matches:
    if m.distance < 3 * min_dist:
        good_matches.append(m)

# Draw the good matches side by side
img_matches = cv2.drawMatches(srcImage1, keypoints_object, srcImage2, keypoints_scene, good_matches, None)

# A homography needs at least 4 point pairs
if len(good_matches) >= 4:
    # Reshape the coordinates to N rows of one (x, y) element each
    obj = np.float32([keypoints_object[m.queryIdx].pt for m in good_matches]).reshape(-1, 1, 2)
    scene = np.float32([keypoints_scene[m.trainIdx].pt for m in good_matches]).reshape(-1, 1, 2)

    # Estimate the homography with RANSAC
    H, _ = cv2.findHomography(obj, scene, cv2.RANSAC)

    # The four corners of the object image
    h, w = srcImage1.shape[0:2]
    obj_corners = np.float32([[0, 0], [w, 0], [w, h], [0, h]]).reshape(-1, 1, 2)
    scene_corners = cv2.perspectiveTransform(obj_corners, H)

    # The scene is the right half of img_matches, so shift every corner right by w
    scene_corners[0][0][0] += w
    scene_corners[1][0][0] += w
    scene_corners[2][0][0] += w
    scene_corners[3][0][0] += w

    # Draw the outline
    cv2.polylines(img_matches, pts=[np.int32(scene_corners)], isClosed=True, color=(255, 0, 123), thickness=4)

cv2.imshow("img_matches", img_matches)
cv2.waitKey(0)
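The corner-mapping step in the listings (the perspective transform followed by the shift by the object image width) comes down to a small amount of arithmetic: each corner is multiplied by the 3x3 matrix in homogeneous coordinates and then divided by the third component. Here is a pure-Python sketch of that arithmetic. The helper name `project_points` and the example matrix are made up for illustration, and raising an error when the third component is zero is a choice made for this sketch only.

```python
def project_points(H, points):
    """Apply a 3x3 homography H (nested lists) to a list of (x, y) points."""
    projected = []
    for x, y in points:
        # Homogeneous coordinates: [x', y', w']^T = H * [x, y, 1]^T
        xp = H[0][0] * x + H[0][1] * y + H[0][2]
        yp = H[1][0] * x + H[1][1] * y + H[1][2]
        wp = H[2][0] * x + H[2][1] * y + H[2][2]
        if wp == 0:
            # Sketch-only behavior: refuse points that map to infinity.
            raise ValueError("point maps to infinity under H")
        projected.append((xp / wp, yp / wp))
    return projected


if __name__ == "__main__":
    # Made-up homography: scale by 2, then translate by (10, 20).
    H = [[2.0, 0.0, 10.0],
         [0.0, 2.0, 20.0],
         [0.0, 0.0, 1.0]]
    w, h = 100, 50  # width and height of a hypothetical object image
    corners = [(0, 0), (w, 0), (w, h), (0, h)]
    scene_corners = project_points(H, corners)
    # Shift right by w so the outline lands on the scene half of the
    # side-by-side image.
    shifted = [(x + w, y) for x, y in scene_corners]
    print(shifted)
```

With a real homography the last row is usually not `[0, 0, 1]`, which is why the division by the third component matters: it is what turns a rectangle into a general quadrilateral in the scene.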

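Supplement:

The code below is the implementation from OpenCV-Python-Tutorial-中文版.pdf (p. 218). It gives the same result as Mao Xingyun's version; I include it here for reference.

Result:

Source code: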

import numpy as np
import cv2
from matplotlib import pyplot as plt

MIN_MATCH_COUNT = 10

img1 = cv2.imread('../images/book_box.jpg', 0)  # queryImage
img2 = cv2.imread('../images/book2.jpg', 0)  # trainImage

# Initiate SIFT detector.
# SIFT is in the main module since OpenCV 4.4;
# cv2.xfeatures2d.SIFT_create() is the older contrib location.
sift = cv2.SIFT_create()

# find the keypoints and descriptors with SIFT
kp1, des1 = sift.detectAndCompute(img1, None)
kp2, des2 = sift.detectAndCompute(img2, None)

FLANN_INDEX_KDTREE = 0
index_params = dict(algorithm=FLANN_INDEX_KDTREE, trees=5)
search_params = dict(checks=50)
flann = cv2.FlannBasedMatcher(index_params, search_params)
matches = flann.knnMatch(des1, des2, k=2)

# store all the good matches as per Lowe's ratio test.
good = []
for m, n in matches:
    if m.distance < 0.7 * n.distance:
        good.append(m)

'''
We only look for the object when more than 10 good matches exist (MIN_MATCH_COUNT = 10);
otherwise we print a message saying there are not enough matches.
If enough matches are found, we extract the coordinates of the matched keypoints in both images
and pass them to findHomography to compute the perspective transform.
Once we have the 3x3 transformation matrix, we can use it to map the four corners
of the query image into the target image, and then draw them.
'''
if len(good) > MIN_MATCH_COUNT:
    # Get the coordinates of the matched keypoints
    src_pts = np.float32([kp1[m.queryIdx].pt for m in good]).reshape(-1, 1, 2)
    dst_pts = np.float32([kp2[m.trainIdx].pt for m in good]).reshape(-1, 1, 2)

    # Third argument: method used to compute the homography matrix. Possible values:
    #   0 - a regular method using all the points
    #   cv2.RANSAC - RANSAC-based robust method
    #   cv2.LMEDS - Least-Median robust method
    # Fourth argument (typically between 1 and 10): the threshold for rejecting a point pair.
    # If the error between a transformed source point and its corresponding point in the
    # target image exceeds this value, the pair is treated as an outlier.
    # In the return value, M is the transformation matrix.
    M, mask = cv2.findHomography(src_pts, dst_pts, cv2.RANSAC, 5.0)
    matchesMask = mask.ravel().tolist()

    # Height and width of the query image
    h, w = img1.shape

    # Use the matrix to transform the four corners of the query image
    # into their coordinates in the target image
    pts = np.float32([[0, 0], [0, h - 1], [w - 1, h - 1], [w - 1, 0]]).reshape(-1, 1, 2)
    dst = cv2.perspectiveTransform(pts, M)

    # The images are grayscale, so the color 255 draws a white outline
    img2 = cv2.polylines(img2, [np.int32(dst)], True, 255, 3, cv2.LINE_AA)
else:
    print("Not enough matches are found - %d/%d" % (len(good), MIN_MATCH_COUNT))
    matchesMask = None

# Finally, draw the inliers if the object was found, or all matched keypoints if it was not.
draw_params = dict(matchColor=(0, 255, 0),  # draw matches in green color
                   singlePointColor=None,
                   matchesMask=matchesMask,  # draw only inliers
                   flags=2)
img3 = cv2.drawMatches(img1, kp1, img2, kp2, good, None, **draw_params)
plt.imshow(img3, 'gray'), plt.show()

# The object found in the cluttered scene is outlined in white.
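The ratio test in the listing above keeps a match only when its best distance is clearly smaller than the second-best one. It can be isolated from OpenCV as a small function over plain distance values. This is a sketch under assumptions: the name `ratio_test` and the list-of-tuples input format are made up for illustration, and skipping entries with fewer than two neighbors is a defensive choice that the listing above does not make.

```python
def ratio_test(knn_distances, ratio=0.7):
    """Return the indices of queries whose best match passes the ratio test.

    `knn_distances` holds, for each query descriptor, the distances to its
    nearest neighbors in ascending order (the kind of data a k=2 nearest
    neighbor search produces). Name and input format are illustrative.
    """
    good = []
    for i, dists in enumerate(knn_distances):
        # Defensive choice for this sketch: skip queries that came back
        # with fewer than two neighbors instead of failing on unpacking.
        if len(dists) < 2:
            continue
        best, second = dists[0], dists[1]
        # Keep the match only if the best distance is well below the second best.
        if best < ratio * second:
            good.append(i)
    return good


if __name__ == "__main__":
    # Made-up distances: (best, second best) per query descriptor.
    sample = [(10.0, 100.0), (50.0, 60.0), (5.0,), (70.0, 101.0)]
    print(ratio_test(sample))  # indices of the queries that pass
```

Compared with the 3 * min_dist rule used in the earlier listings, this test judges each match against its own runner-up rather than against one global minimum, so a single unusually good match does not tighten the threshold for all the others.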