Feature Point Matching with FLANN


This chapter demonstrates feature point matching with FLANN, using two feature detectors: SURF and SIFT.

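Mao Xingyun's code demonstrates SURF on opencv-2.4.9, but the author could not find SURF in opencv-2.4.9, so for now the demonstration focuses on SIFT (only the C# version below covers both SURF and SIFT).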

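The C# and C++ versions offer two ways to obtain descriptors: Detect followed by Compute, or a single DetectAndCompute call. The results are practically the same, and the author's code includes both. In the Python version, Detect did not seem to work for the author: the call returned an empty result.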

Download link for all demos in this series:

https://github.com/GaoRenBao/OpenCv4-Demo

The OpenCV version and development environment used for each language:

| Language | OpenCV version | IDE | Methods included |
|---|---|---|---|
| C# | OpenCvSharp4.4.8.0.20230708 | Visual Studio 2022 | SURF and SIFT |
| C++ | OpenCv-4.5.5-vc14_vc15 | Visual Studio 2022 | SIFT |
| Python | OpenCv-Python (4.6.0.66) | PyCharm Community Edition 2022.1.3 | SIFT |

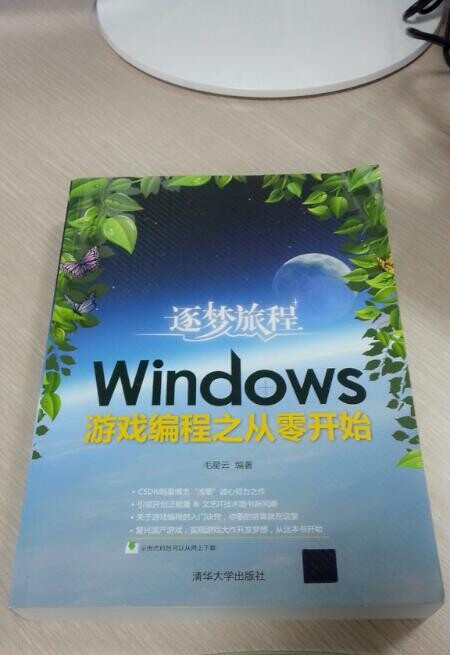

First, we need two test images. (If you would rather not download the project, you can copy the images and code below directly.)

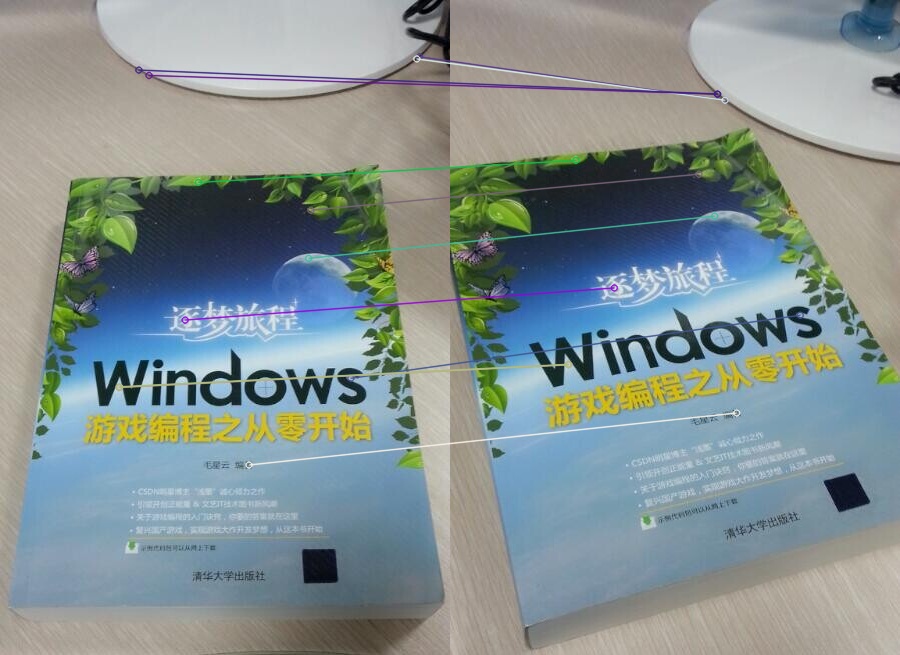

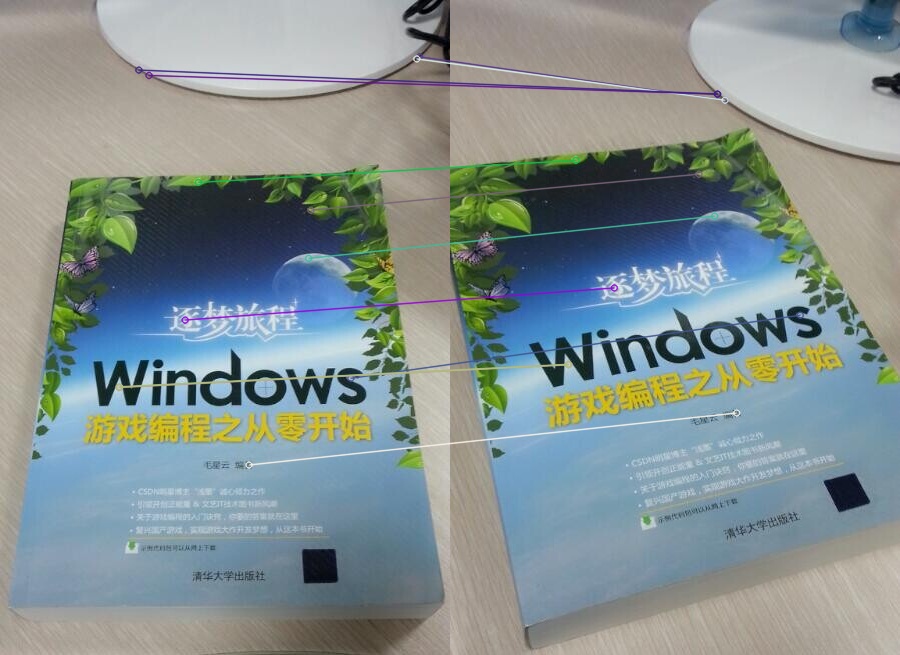

Output of the C++ SIFT version (the other versions give similar results):

C# version:

using OpenCvSharp;
using System.Collections.Generic;

namespace demo
{
    internal class Program
    {
        static void Main(string[] args)
        {
            demo1();
            demo2();
            Cv2.WaitKey();
        }

        /// <summary>
        /// SURF
        /// </summary>
        static void demo1()
        {
            // Load the source images
            Mat img_1 = Cv2.ImRead("../../../images/book2.jpg");
            Mat img_2 = Cv2.ImRead("../../../images/book3.jpg");

            // Create the feature detector (Hessian threshold 300)
            var MySurf = OpenCvSharp.XFeatures2D.SURF.Create(300);

            // Keypoints and descriptors (feature vectors) of both images
            KeyPoint[] keypoints_1, keypoints_2;
            Mat descriptors_1 = new Mat();
            Mat descriptors_2 = new Mat();

            // Method 1: detect keypoints and compute descriptors in two separate steps
            //keypoints_1 = MySurf.Detect(img_1);
            //keypoints_2 = MySurf.Detect(img_2);
            //MySurf.Compute(img_1, ref keypoints_1, descriptors_1);
            //MySurf.Compute(img_2, ref keypoints_2, descriptors_2);

            // Method 2: detect keypoints and compute descriptors in one call
            MySurf.DetectAndCompute(img_1, null, out keypoints_1, descriptors_1);
            MySurf.DetectAndCompute(img_2, null, out keypoints_2, descriptors_2);

            // Match the descriptor vectors with FLANN
            FlannBasedMatcher matcher = new FlannBasedMatcher();
            DMatch[] matches = matcher.Match(descriptors_1, descriptors_2);

            // Find the largest and smallest match distances.
            // min_dist starts at double.MaxValue so that it always ends up as a real distance.
            double max_dist = 0; double min_dist = double.MaxValue;
            foreach (DMatch m in matches)
            {
                double dist = m.Distance;
                if (dist < min_dist) min_dist = dist;
                if (dist > max_dist) max_dist = dist;
            }

            // Print the distance information
            System.Diagnostics.Debug.WriteLine($"Max dist : {max_dist}");
            System.Diagnostics.Debug.WriteLine($"Min dist : {min_dist}");

            // Keep the matches whose distance is below 2 * min_dist; radiusMatch could be used instead
            List<DMatch> good_matches = new List<DMatch>();
            foreach (DMatch m in matches)
            {
                if (m.Distance < 2 * min_dist)
                    good_matches.Add(m);
            }

            // Draw the good matches
            Mat img_matches = new Mat();
            Cv2.DrawMatches(img_1, keypoints_1, img_2, keypoints_2, good_matches, img_matches);

            // Show the result
            Cv2.ImShow("SURF matches", img_matches);
        }

        /// <summary>
        /// SIFT
        /// </summary>
        static void demo2()
        {
            // Load the source images
            Mat img_1 = Cv2.ImRead("../../../images/book2.jpg");
            Mat img_2 = Cv2.ImRead("../../../images/book3.jpg");

            // Create the feature detector (keeps at most 300 features)
            var MySift = OpenCvSharp.Features2D.SIFT.Create(300);

            // Keypoints and descriptors (feature vectors) of both images
            KeyPoint[] keypoints_1, keypoints_2;
            Mat descriptors_1 = new Mat();
            Mat descriptors_2 = new Mat();

            // Method 1: detect keypoints and compute descriptors in two separate steps
            //keypoints_1 = MySift.Detect(img_1);
            //keypoints_2 = MySift.Detect(img_2);
            //MySift.Compute(img_1, ref keypoints_1, descriptors_1);
            //MySift.Compute(img_2, ref keypoints_2, descriptors_2);

            // Method 2: detect keypoints and compute descriptors in one call
            MySift.DetectAndCompute(img_1, null, out keypoints_1, descriptors_1);
            MySift.DetectAndCompute(img_2, null, out keypoints_2, descriptors_2);

            // Match the descriptor vectors with FLANN
            FlannBasedMatcher matcher = new FlannBasedMatcher();
            DMatch[] matches = matcher.Match(descriptors_1, descriptors_2);

            // Find the largest and smallest match distances.
            // SIFT distances can exceed 100, so min_dist starts at double.MaxValue.
            double max_dist = 0; double min_dist = double.MaxValue;
            foreach (DMatch m in matches)
            {
                double dist = m.Distance;
                if (dist < min_dist) min_dist = dist;
                if (dist > max_dist) max_dist = dist;
            }

            // Print the distance information
            System.Diagnostics.Debug.WriteLine($"Max dist : {max_dist}");
            System.Diagnostics.Debug.WriteLine($"Min dist : {min_dist}");

            // Keep the matches whose distance is below 2 * min_dist; radiusMatch could be used instead
            List<DMatch> good_matches = new List<DMatch>();
            foreach (DMatch m in matches)
            {
                if (m.Distance < 2 * min_dist)
                    good_matches.Add(m);
            }

            // Draw the good matches
            Mat img_matches = new Mat();
            Cv2.DrawMatches(img_1, keypoints_1, img_2, keypoints_2, good_matches, img_matches);

            // Show the result
            Cv2.ImShow("SIFT matches", img_matches);
        }
    }
}
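Both C# demos keep only the matches whose distance is below 2 * min_dist. The following is a minimal Python sketch of that filter on plain numbers, independent of OpenCV. The function name `filter_by_min_distance` and its `floor` parameter are assumptions introduced here for illustration and are not part of the original demos. The floor is one possible guard for the case where the smallest distance is close to zero, which would otherwise reject almost every match.

```python
def filter_by_min_distance(distances, factor=2.0, floor=0.0):
    """Return the indices of distances below max(factor * min_distance, floor).

    `floor` is a hypothetical safeguard: with floor=0.0 the rule is exactly
    "distance < factor * min_distance", as in the demos.
    """
    if not distances:
        return []
    min_dist = min(distances)
    threshold = max(factor * min_dist, floor)
    return [i for i, d in enumerate(distances) if d < threshold]


# Made-up distances: the minimum is 60, so the threshold is 120.
distances = [120.0, 95.0, 300.0, 180.0, 60.0]
print(filter_by_min_distance(distances))  # [1, 4]
```

Note that the comparison is strict, so a distance exactly equal to the threshold (120.0 here) is rejected, matching the `<` used in the demos.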

C++ version:

#include <opencv2/opencv.hpp>
#include <opencv2/highgui/highgui.hpp>
#include <opencv2/features2d.hpp>
#include <cstdio>
#include <limits>
#include <vector>

using namespace cv;
using namespace std;

int main()
{
    // [1] Load the source images
    Mat img_1 = imread("../images/book2.jpg", 1);
    Mat img_2 = imread("../images/book3.jpg", 1);
    if (img_1.empty() || img_2.empty()) { printf("Failed to read the input images!\n"); return -1; }

    // [2] Create the SIFT detector (keeps at most 300 features)
    Ptr<SIFT> siftDetector = SIFT::create(300);
    Mat descriptors_1, descriptors_2;
    vector<KeyPoint> keypoints_1, keypoints_2;

    // [3] Detect keypoints and compute descriptors (feature vectors)
    // Method 1: detect and compute as two separate steps
    //siftDetector->detect(img_1, keypoints_1);
    //siftDetector->detect(img_2, keypoints_2);
    //siftDetector->compute(img_1, keypoints_1, descriptors_1);
    //siftDetector->compute(img_2, keypoints_2, descriptors_2);

    // Method 2: detect and compute in one call
    siftDetector->detectAndCompute(img_1, cv::Mat(), keypoints_1, descriptors_1);
    siftDetector->detectAndCompute(img_2, cv::Mat(), keypoints_2, descriptors_2);

    // [4] Match the descriptor vectors with FLANN
    FlannBasedMatcher matcher;
    vector<DMatch> matches;
    matcher.match(descriptors_1, descriptors_2, matches);

    // [5] Find the largest and smallest match distances.
    // SIFT distances can exceed 100, so min_dist starts at the largest double.
    double max_dist = 0; double min_dist = numeric_limits<double>::max();
    for (size_t i = 0; i < matches.size(); i++)
    {
        double dist = matches[i].distance;
        if (dist < min_dist) min_dist = dist;
        if (dist > max_dist) max_dist = dist;
    }

    // Print the distance information
    printf("> Max dist : %f \n", max_dist);
    printf("> Min dist : %f \n", min_dist);

    // [6] Keep the matches whose distance is below 2 * min_dist; radiusMatch could be used instead
    std::vector<DMatch> good_matches;
    for (size_t i = 0; i < matches.size(); i++)
    {
        if (matches[i].distance < 2 * min_dist)
        {
            good_matches.push_back(matches[i]);
        }
    }

    // [7] Draw the good matches
    Mat img_matches;
    drawMatches(img_1, keypoints_1, img_2, keypoints_2,
        good_matches, img_matches, Scalar::all(-1), Scalar::all(-1),
        vector<char>(), DrawMatchesFlags::NOT_DRAW_SINGLE_POINTS);

    // [8] Print the indices of the matched keypoints
    for (size_t i = 0; i < good_matches.size(); i++)
    {
        printf("> Good match [%zu] keypoint 1: %d -- keypoint 2: %d \n", i, good_matches[i].queryIdx, good_matches[i].trainIdx);
    }

    // [9] Show the result
    imshow("Matches", img_matches);

    // Press any key to exit
    waitKey(0);
    return 0;
}
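The `match` call above pairs each descriptor of the first image (the query) with its closest descriptor in the second image (the train set), which is why every `DMatch` carries a `queryIdx`, a `trainIdx` and a `distance`. The sketch below illustrates that idea in plain Python with an exhaustive search and Euclidean distance. It is not how FLANN works internally (FLANN uses index structures to find approximate nearest neighbours faster), and the function name `nearest_neighbour_matches` and the toy 2-D vectors are made up for this example.

```python
import math


def nearest_neighbour_matches(query, train):
    """For each query vector, return (query_idx, train_idx, distance)
    of its closest train vector under Euclidean distance."""
    matches = []
    for qi, q in enumerate(query):
        best_idx, best_dist = -1, math.inf
        for ti, t in enumerate(train):
            d = math.dist(q, t)  # Euclidean distance (Python 3.8+)
            if d < best_dist:
                best_idx, best_dist = ti, d
        matches.append((qi, best_idx, best_dist))
    return matches


# Toy 2-D "descriptors"; real SIFT descriptors have 128 dimensions.
query = [(0.0, 0.0), (5.0, 5.0)]
train = [(4.0, 4.0), (0.0, 1.0), (9.0, 9.0)]
for m in nearest_neighbour_matches(query, train):
    print(m)
```

The result contains one entry per query vector, so the number of matches never exceeds the number of descriptors in the first image.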

Python version:

import cv2
import numpy as np

# [1] Load the images
img_1 = cv2.imread("../images/book2.jpg")
img_2 = cv2.imread("../images/book3.jpg")

# Create the feature detector (keeps at most 300 features)
sift = cv2.SIFT_create(300)

# Method 1: detect keypoints and compute descriptors (feature vectors) as two separate steps
#keypoints_1 = sift.detect(img_1)
#keypoints_2 = sift.detect(img_2)
#(keypoints_1, descriptors_1) = sift.compute(img_1, keypoints_1)
#(keypoints_2, descriptors_2) = sift.compute(img_2, keypoints_2)

# Method 2: detect keypoints and compute descriptors in one call
(keypoints_1, descriptors_1) = sift.detectAndCompute(img_1, None)
(keypoints_2, descriptors_2) = sift.detectAndCompute(img_2, None)

# Note: this version uses the brute-force matcher (BFMatcher) rather than FLANN
matcher = cv2.BFMatcher()  # create the matcher
matches = matcher.match(descriptors_1, descriptors_2)  # match the descriptors
matches = sorted(matches, key=lambda x: x.distance)  # sort by distance

# Find the largest and smallest match distances.
# Iterate over the matches themselves: descriptors_1.shape[1] is the
# descriptor length (128 for SIFT), not the number of matches.
max_dist = 0
min_dist = float("inf")
for m in matches:
    dist = m.distance
    if dist < min_dist:
        min_dist = dist
    if dist > max_dist:
        max_dist = dist

# Keep the matches whose distance is below 2 * min_dist; radiusMatch could be used instead
good_matches = []
for m in matches:
    if m.distance < 2 * min_dist:
        good_matches.append(m)

# Draw the matches
img_matches = cv2.drawMatches(img_1, keypoints_1, img_2, keypoints_2, good_matches, None)
cv2.imshow("img_matches", img_matches)
cv2.waitKey(0)
cv2.destroyAllWindows()