YOLOX Custom Dataset Training, Method 1 (VOC)

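The custom dataset is again annotated with Labelme.

For installation, see "Installing and Running Labelme".

For the annotation procedure, see "YOLOv5 Custom Dataset Training".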

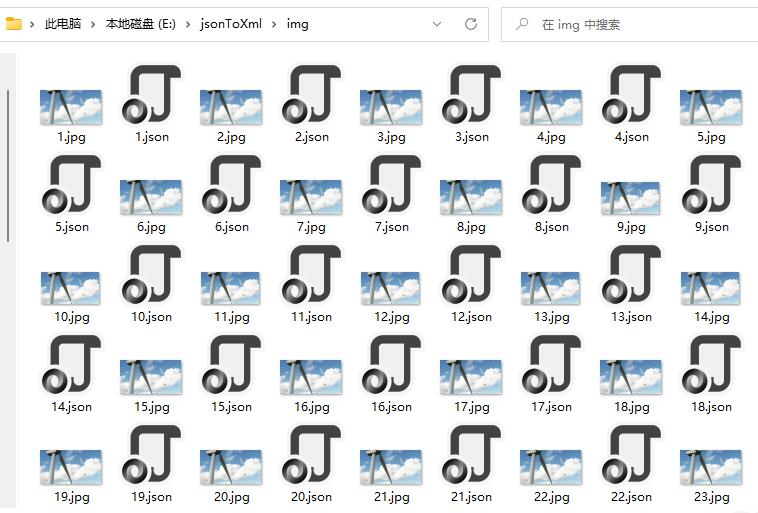

After annotation, Labelme produces a batch of JSON files. The following code converts those JSON files into the VOC-style XML files that YOLOX reads:

# -*- coding: utf-8 -*-
import os
import numpy as np
import codecs
import json
import glob
import cv2
from sklearn.model_selection import train_test_split

# 1. Paths
labelme_path = "E:/jsonToXml/img/"  # original labelme data (contains both the images and the json files)
saved_path = "E:/jsonToXml/out/"  # output directory

# 2. Create the required folders
dst_annotation_dir = os.path.join(saved_path, 'Annotations')
if not os.path.exists(dst_annotation_dir):
    os.makedirs(dst_annotation_dir)
dst_image_dir = os.path.join(saved_path, "JPEGImages")
if not os.path.exists(dst_image_dir):
    os.makedirs(dst_image_dir)
dst_main_dir = os.path.join(saved_path, "ImageSets", "Main")
if not os.path.exists(dst_main_dir):
    os.makedirs(dst_main_dir)

# 3. Collect the files to process
org_json_files = sorted(glob.glob(os.path.join(labelme_path, '*.json')))
# os.path extracts the base name with the platform's own separator,
# instead of assuming every path is split by "\\"
org_json_file_names = [os.path.splitext(os.path.basename(i))[0] for i in org_json_files]
org_img_files = sorted(glob.glob(os.path.join(labelme_path, '*.jpg')))
org_img_file_names = [os.path.splitext(os.path.basename(i))[0] for i in org_img_files]

# 4. Convert each labelme file to a VOC annotation
for i, json_file_ in enumerate(org_json_files):
    with open(json_file_, "r", encoding="utf-8") as f:
        json_file = json.load(f)
    image_path = os.path.join(labelme_path, org_json_file_names[i] + '.jpg')
    print(image_path)
    img = cv2.imread(image_path)
    if img is None:
        # cv2.imread returns None when the image is missing or cannot be read
        print("skipped, cannot read image:", image_path)
        continue
    height, width, channels = img.shape
    # Images and annotations are renamed to a six-digit index: 000000, 000001, ...
    dst_image_path = os.path.join(dst_image_dir, "{:06d}.jpg".format(i))
    cv2.imwrite(dst_image_path, img)
    dst_annotation_path = os.path.join(dst_annotation_dir, '{:06d}.xml'.format(i))
    with codecs.open(dst_annotation_path, "w", "utf-8") as xml:
        xml.write('<annotation>\n')
        xml.write('\t<folder>' + 'VOC2007' + '</folder>\n')
        xml.write('\t<filename>' + "{:06d}.jpg".format(i) + '</filename>\n')
        xml.write('\t<source>\n')
        xml.write('\t\t<database>The VOC2007 Database</database>\n')
        xml.write('\t\t<annotation>PASCAL VOC2007</annotation>\n')
        xml.write('\t\t<image>flickr</image>\n')
        xml.write('\t\t<flickrid>NULL</flickrid>\n')
        xml.write('\t</source>\n')
        xml.write('\t<owner>\n')
        xml.write('\t\t<flickrid>NULL</flickrid>\n')
        xml.write('\t\t<name>Dale Peeples</name>\n')
        xml.write('\t</owner>\n')
        xml.write('\t<size>\n')
        xml.write('\t\t<width>' + str(width) + '</width>\n')
        xml.write('\t\t<height>' + str(height) + '</height>\n')
        xml.write('\t\t<depth>' + str(channels) + '</depth>\n')
        xml.write('\t</size>\n')
        xml.write('\t<segmented>0</segmented>\n')
        for multi in json_file["shapes"]:
            points = np.array(multi["points"])
            # Pixel coordinates are integers, so cast to int
            xmin = int(min(points[:, 0]))
            xmax = int(max(points[:, 0]))
            ymin = int(min(points[:, 1]))
            ymax = int(max(points[:, 1]))
            label = multi["label"]
            # Skip degenerate boxes with zero width or zero height
            if xmax <= xmin:
                pass
            elif ymax <= ymin:
                pass
            else:
                xml.write('\t<object>\n')
                xml.write('\t\t<name>' + label + '</name>\n')
                xml.write('\t\t<pose>Unspecified</pose>\n')
                xml.write('\t\t<truncated>1</truncated>\n')
                xml.write('\t\t<difficult>0</difficult>\n')
                xml.write('\t\t<bndbox>\n')
                xml.write('\t\t\t<xmin>' + str(xmin) + '</xmin>\n')
                xml.write('\t\t\t<ymin>' + str(ymin) + '</ymin>\n')
                xml.write('\t\t\t<xmax>' + str(xmax) + '</xmax>\n')
                xml.write('\t\t\t<ymax>' + str(ymax) + '</ymax>\n')
                xml.write('\t\t</bndbox>\n')
                xml.write('\t</object>\n')
                print(json_file_, xmin, ymin, xmax, ymax, label)
        xml.write('</annotation>')

# 5. Write the split lists (ImageSets/Main/*.txt)
train_file = os.path.join(dst_main_dir, 'train.txt')
trainval_file = os.path.join(dst_main_dir, 'trainval.txt')
val_file = os.path.join(dst_main_dir, 'val.txt')
test_file = os.path.join(dst_main_dir, 'test.txt')
ftrain = open(train_file, 'w')
ftrainval = open(trainval_file, 'w')
fval = open(val_file, 'w')
ftest = open(test_file, 'w')
total_annotation_files = glob.glob(os.path.join(dst_annotation_dir, "*.xml"))
total_annotation_names = [os.path.splitext(os.path.basename(i))[0] for i in total_annotation_files]
# test_filepath = ""
# trainval.txt lists every annotated sample
for file in total_annotation_names:
    ftrainval.write(file + '\n')
# test
# for file in os.listdir(test_filepath):
#     ftest.write(file.split(".jpg")[0] + "\n")
# split: 80% train, 20% val
train_files, val_files = train_test_split(total_annotation_names, test_size=0.2)
# train
for file in train_files:
    ftrain.write(file + '\n')
# val
for file in val_files:
    fval.write(file + '\n')
ftrainval.close()
ftrain.close()
fval.close()
ftest.close()  # test.txt is opened above, so close it even though it stays empty
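Before training, it can be worth reading a few of the generated XML files back to confirm the boxes look sane. The following is a minimal sketch using only the standard library; the function names `read_voc_boxes` and `find_bad_boxes` are hypothetical helpers, not part of YOLOX or of the conversion script, and the sketch assumes the XML layout written by the script above.

```python
import xml.etree.ElementTree as ET


def read_voc_boxes(xml_path):
    """Return (width, height, boxes) parsed from one VOC annotation file.

    Each box is a tuple (label, xmin, ymin, xmax, ymax).
    """
    root = ET.parse(xml_path).getroot()
    size = root.find("size")
    width = int(size.find("width").text)
    height = int(size.find("height").text)
    boxes = []
    # Only direct <object> children of <annotation> are annotation boxes
    for obj in root.findall("object"):
        bnd = obj.find("bndbox")
        boxes.append((
            obj.find("name").text,
            int(bnd.find("xmin").text),
            int(bnd.find("ymin").text),
            int(bnd.find("xmax").text),
            int(bnd.find("ymax").text),
        ))
    return width, height, boxes


def find_bad_boxes(xml_path):
    """List boxes that are empty or extend outside the image bounds."""
    width, height, boxes = read_voc_boxes(xml_path)
    return [
        b for b in boxes
        if not (0 <= b[1] < b[3] <= width and 0 <= b[2] < b[4] <= height)
    ]
```

Calling `find_bad_boxes` on a file such as `Annotations/000000.xml` under the output directory returns an empty list when every box lies inside the image.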

The conversion script depends on the scikit-learn package, which can be installed with the following command.

pip install scikit-learn -i https://mirror.baidu.com/pypi/simple

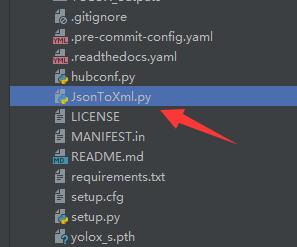

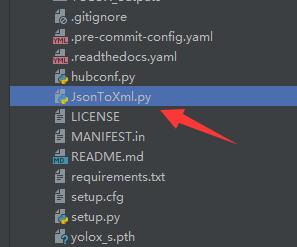
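If installing scikit-learn only for the train/val split is inconvenient, the split could also be done with the standard library. This is a sketch of an alternative, not what the script above does: `split_names`, `val_ratio` and `seed` are hypothetical names, and the rounding of the validation size may differ from `train_test_split`.

```python
import random


def split_names(names, val_ratio=0.2, seed=0):
    """Shuffle names reproducibly and split them into (train, val) lists."""
    # Sort first so the result does not depend on the input order
    shuffled = sorted(names)
    # A private Random instance keeps the global random state untouched
    random.Random(seed).shuffle(shuffled)
    n_val = max(1, round(len(shuffled) * val_ratio)) if shuffled else 0
    return shuffled[n_val:], shuffled[:n_val]
```

A fixed seed makes the split repeatable across runs, which is convenient when comparing training results on the same dataset.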

For convenience, I placed this script directly in the YOLOX project directory and installed scikit-learn in the YOLOX virtual environment.

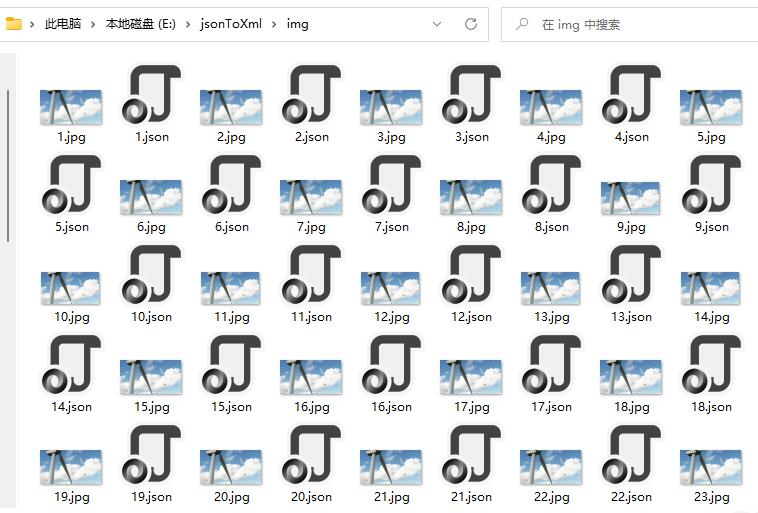

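Put the JPG images and the JSON files in the same directory. Note: do not use a path that contains Chinese characters, or the script will fail.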

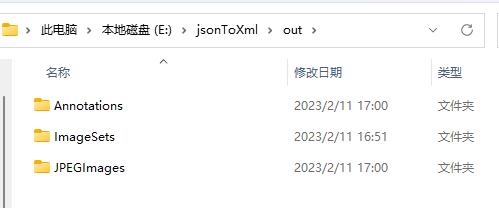

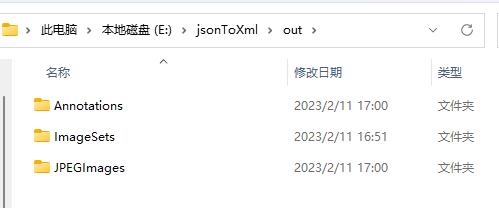
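The two requirements above (every JSON file sits next to its JPG, and the path contains no Chinese characters) can be checked before running the conversion. The following is a small sketch under those assumptions; `check_labelme_dir` is a hypothetical helper, and it treats any non-ASCII character in the path as a problem, which is stricter than "no Chinese characters".

```python
import glob
import os


def check_labelme_dir(labelme_path):
    """Return (non_ascii_path, json_without_jpg, jpg_without_json) for a folder."""
    # str.isascii() requires Python 3.7 or later
    non_ascii_path = not os.path.abspath(labelme_path).isascii()
    json_stems = {
        os.path.splitext(os.path.basename(p))[0]
        for p in glob.glob(os.path.join(labelme_path, "*.json"))
    }
    jpg_stems = {
        os.path.splitext(os.path.basename(p))[0]
        for p in glob.glob(os.path.join(labelme_path, "*.jpg"))
    }
    return (
        non_ascii_path,
        sorted(json_stems - jpg_stems),  # annotations with no image
        sorted(jpg_stems - json_stems),  # images with no annotation
    )
```

If the first value is `True` or the first list is not empty, the conversion script is likely to fail when it tries to read an image.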

Then run the conversion script; it automatically creates the folders YOLOX needs.

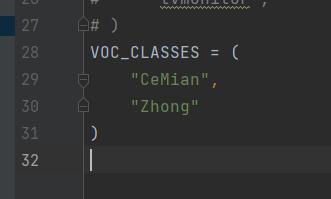

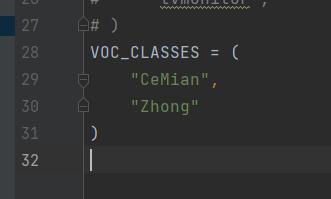
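A quick way to confirm the generated folders are complete is to count what ended up in each of them. The sketch below assumes the layout produced by the script (`Annotations`, `JPEGImages`, `ImageSets/Main`); `summarize_voc_output` is a hypothetical helper name.

```python
import os


def summarize_voc_output(saved_path):
    """Count the files the conversion script is expected to produce."""

    def stems(subdir, ext):
        # Base names (without extension) of the files in one output folder
        folder = os.path.join(saved_path, subdir)
        if not os.path.isdir(folder):
            return set()
        return {os.path.splitext(f)[0] for f in os.listdir(folder) if f.endswith(ext)}

    def listed(name):
        # Sample names listed in one of the ImageSets/Main text files
        path = os.path.join(saved_path, "ImageSets", "Main", name)
        if not os.path.isfile(path):
            return set()
        with open(path, encoding="utf-8") as f:
            return {line.strip() for line in f if line.strip()}

    xml, jpg = stems("Annotations", ".xml"), stems("JPEGImages", ".jpg")
    train, val = listed("train.txt"), listed("val.txt")
    return {
        "annotations": len(xml),
        "images": len(jpg),
        "unpaired": sorted(xml ^ jpg),  # present in only one of the two folders
        "train": len(train),
        "val": len(val),
        "train_val_overlap": sorted(train & val),
    }
```

For a healthy conversion, `annotations` equals `images`, and both `unpaired` and `train_val_overlap` are empty.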

Then train using the method from the previous chapters (remember to change the sample classes; I have only two here).

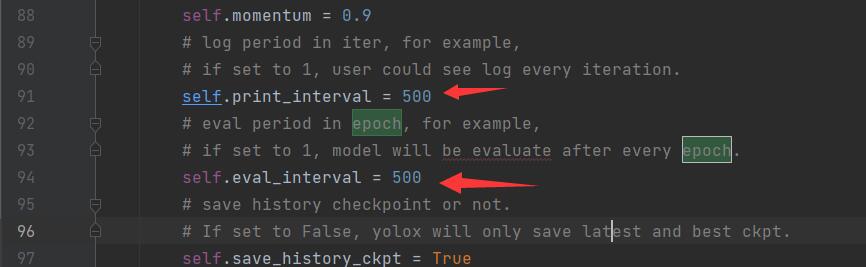

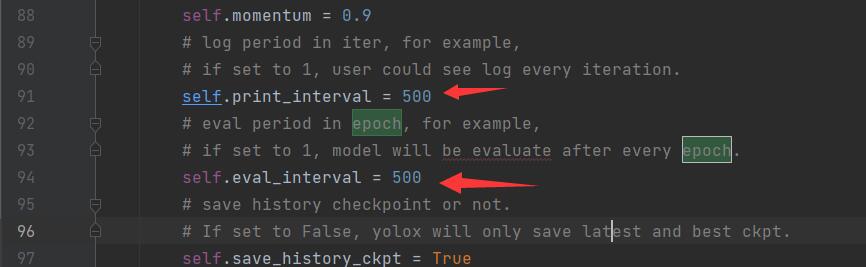
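To know which classes to configure, the label names can be read straight from the Labelme JSON files. This is a minimal sketch, assuming each JSON file has a `shapes` list whose entries carry a `label` field, as the conversion script above also assumes; `count_labels` is a hypothetical helper.

```python
import glob
import json
import os
from collections import Counter


def count_labels(labelme_path):
    """Count how many shapes carry each label across all json files in a folder."""
    counts = Counter()
    for path in sorted(glob.glob(os.path.join(labelme_path, "*.json"))):
        with open(path, "r", encoding="utf-8") as f:
            data = json.load(f)
        for shape in data.get("shapes", []):
            counts[shape["label"]] += 1
    return counts
```

The sorted keys of the returned counter give the class names, and its length gives the number of classes. A misspelled label shows up as an extra class with a very low count.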

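Note: to reduce the number of evaluation runs, increase the following two values in "YOLOX-main\yolox\exp\yolox_base.py"; both default to 10.

I have 499 sample images, so I simply set them to 500.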
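The effect of a larger interval can be estimated with simple arithmetic. The sketch below is only an illustration: the text does not name the two values, so it assumes one of them is an evaluation interval counted in epochs and that an evaluation runs whenever the number of finished epochs is a multiple of it. `max_epoch` and `eval_interval` are illustrative parameter names here.

```python
def evaluation_epochs(max_epoch, eval_interval):
    """Epochs (1-based) after which an evaluation would run.

    Assumption: evaluation happens when the count of finished epochs
    is a multiple of eval_interval.
    """
    return [e for e in range(1, max_epoch + 1) if e % eval_interval == 0]
```

Under this assumption, an interval larger than the total number of epochs yields an empty list, meaning no evaluation runs at all during training.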

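After training completes, test the model using the method described earlier. The final detection results are as follows:

Note: if training did not produce a best_ckpt.pth file, latest_ckpt.pth can be used instead.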