Jetson Nano Voice Input and ASR

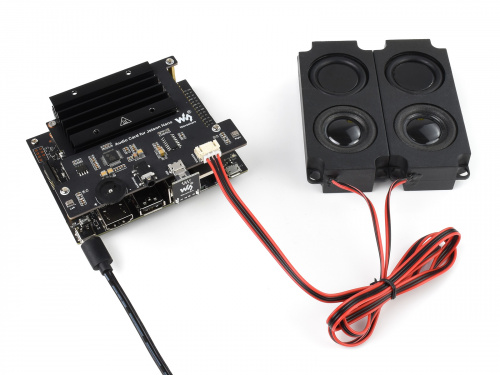

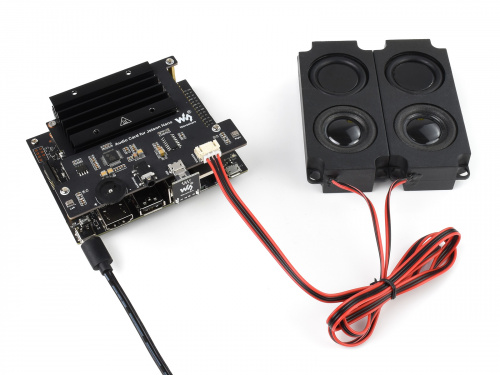

Sound card module reference: Using a sound card on the Jetson Nano
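The test script further down opens the microphone with a hardcoded `input_device_index=0`. Which index belongs to the sound card depends on the system. The sketch below lists every device that can record. `list_input_devices` is a hypothetical helper name. It only uses PyAudio's `get_device_count()` and `get_device_info_by_index()`, so any object with those two methods can be passed in.

```python
def list_input_devices(pa):
    """Return (index, name, max_input_channels) for every device that can record.

    `pa` is expected to be a pyaudio.PyAudio instance; only its
    get_device_count() and get_device_info_by_index() methods are used.
    """
    devices = []
    for i in range(pa.get_device_count()):
        info = pa.get_device_info_by_index(i)
        # Output-only devices report 0 input channels; skip them
        if info.get("maxInputChannels", 0) > 0:
            devices.append((i, info.get("name", ""), int(info["maxInputChannels"])))
    return devices
```

On the Nano, call it as `list_input_devices(pyaudio.PyAudio())` and use the index of the sound card as `input_device_index`. Remember to call `terminate()` on the `PyAudio` object afterwards.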

The hardware is connected as follows:

Notes:

1. The capture channel count (`channels`) must be 2 (stereo). The sound card does not support mono capture.
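With stereo capture, each 16-bit frame holds a left and a right sample interleaved as L, R, L, R, and so on. The original script passes the stereo recording straight to rapid_paraformer. If a later step needs mono audio, the two channels can be averaged. That need is an assumption, not something the original states. The sketch below uses a hypothetical helper name and requires NumPy:

```python
import numpy as np


def stereo_to_mono(pcm_bytes: bytes) -> bytes:
    """Average interleaved 16-bit stereo PCM (L, R, L, R, ...) into mono PCM."""
    samples = np.frombuffer(pcm_bytes, dtype=np.int16)
    # One row per frame, columns = (left, right); widen to int32 so the sum cannot overflow
    frames = samples.reshape(-1, 2).astype(np.int32)
    mono = (frames[:, 0] + frames[:, 1]) // 2
    return mono.astype(np.int16).tobytes()
```

The mono result would then be written to the WAV file with `setnchannels(1)` instead of `CHANNELS`.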

2. Download location and usage instructions for the rapid-paraformer ASR model: https://pypi.org/project/rapid-paraformer/2.0.4/

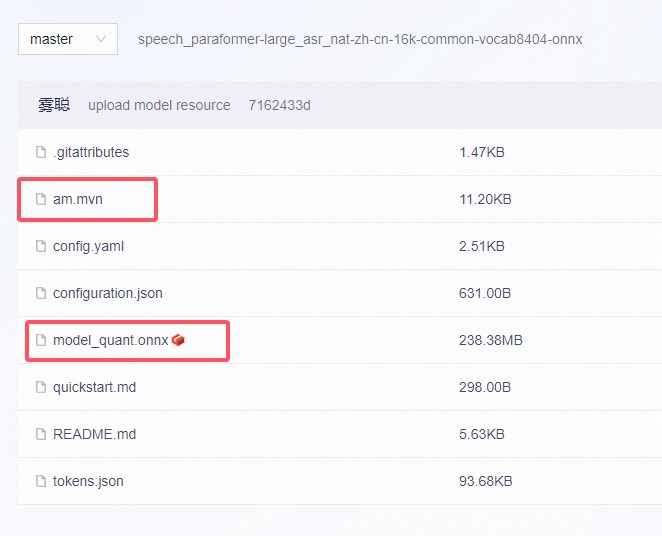

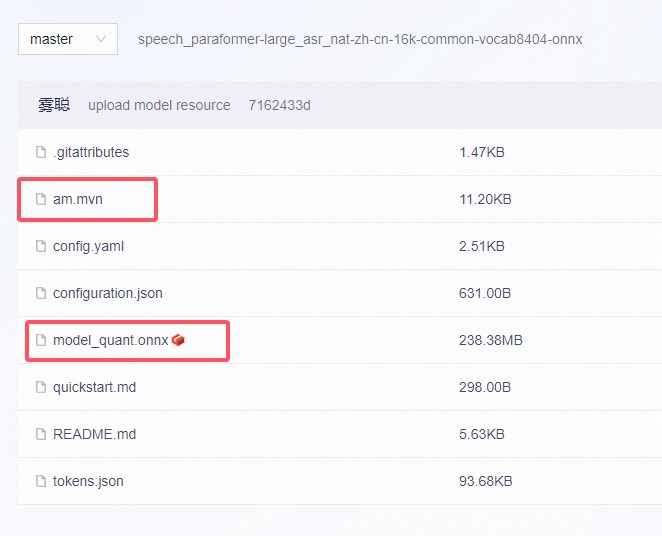

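3. ASR model from the ModelScope (魔搭) community: speech_paraformer-large_asr_nat-zh-cn-16k-common-vocab8404-onnx

Rename the downloaded "model_quant.onnx" to "asr_paraformerv2.onnx", the file name rapid_paraformer expects. Then use it to replace the existing file in the models directory.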
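The rename-and-replace step can be scripted. The sketch below uses a hypothetical helper, `install_model`, and copies rather than moves the file, so the original download stays in place. The location of the models directory depends on your rapid_paraformer installation. A path such as `resources/models` is only an assumption; check the path in your `config.yaml`.

```python
import shutil
from pathlib import Path


def install_model(downloaded: str, models_dir: str) -> Path:
    """Copy the downloaded model_quant.onnx into models_dir as asr_paraformerv2.onnx."""
    src = Path(downloaded)
    dst_dir = Path(models_dir)
    dst_dir.mkdir(parents=True, exist_ok=True)
    dst = dst_dir / "asr_paraformerv2.onnx"
    shutil.copyfile(src, dst)  # overwrites any existing asr_paraformerv2.onnx
    return dst
```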

4. Drawback: I could not find any way to do hotword recognition with rapid_paraformer.

The complete test code:

```python
import os  # only needed for the optional aplay playback below
import time
import wave
from datetime import datetime

import numpy as np
import pyaudio
from rapid_paraformer import RapidParaformer

FORMAT = pyaudio.paInt16
CHANNELS = 2            # the sound card only supports stereo capture
RATE = 16000            # sample rate in Hz
CHUNK_SIZE = 1024       # frames per read (1024 / 16000 ≈ 64 ms)
MAX_WAV = int(RATE / CHUNK_SIZE * 0.5)  # ~0.5 s of quiet chunks ends a sentence
ENERGY_THRESHOLD = 200  # mean absolute amplitude treated as speech
MAX_SECONDS = 10        # hard limit on one recording
MIN_CHUNKS = 11         # shorter segments (~0.7 s) are discarded as noise


def getData():
    """Return the current time as 'YYYY-M-D H:M:S'."""
    now = datetime.now()
    return f"{now.year}-{now.month}-{now.day} {now.hour}:{now.minute}:{now.second}"


def wavTotext(path, paraformer):
    """Run ASR on a WAV file and print the result between two timestamps."""
    print(getData())
    result = paraformer([path])
    print(getData())
    print(result)


def record_to_file(path, data):
    """Save raw 16-bit PCM bytes as a WAV file."""
    with wave.open(path, 'wb') as wf:
        wf.setnchannels(CHANNELS)
        wf.setsampwidth(2)  # 16-bit samples
        wf.setframerate(RATE)
        wf.writeframes(data)


def record_sentence(stream):
    """Block until one sentence has been captured and return its raw bytes."""
    ReadStart = False  # True once speech has been detected
    ReadCount = 0      # consecutive quiet chunks since the last loud one
    voiced_frames = []
    print("* recording: ")
    stream.start_stream()
    StartTime = time.time()
    while True:
        chunk = stream.read(CHUNK_SIZE, exception_on_overflow=False)
        # Audio energy = mean absolute amplitude; widen to int32 so abs(-32768) cannot overflow
        audio_data = np.frombuffer(chunk, dtype=np.int16).astype(np.int32)
        energy = np.abs(audio_data).mean()
        if energy > ENERGY_THRESHOLD:
            ReadCount = 0
            if not ReadStart:
                print("Speech start detected")
                StartTime = time.time()
                ReadStart = True
        elif ReadStart:
            ReadCount += 1
        if ReadStart:
            voiced_frames.append(chunk)  # keep the raw bytes for the WAV file
        if (ReadStart and ReadCount > MAX_WAV) or (time.time() - StartTime) > MAX_SECONDS:
            if len(voiced_frames) > MIN_CHUNKS:
                print("Speech end detected", len(voiced_frames))
                stream.stop_stream()
                print("* done recording")
                return b''.join(voiced_frames)
            # Too short, or nothing heard for MAX_SECONDS: reset and keep listening
            voiced_frames = []
            ReadStart = False
            ReadCount = 0
            StartTime = time.time()


if __name__ == '__main__':
    config_path = "resources/config.yaml"
    paraformer = RapidParaformer(config_path)
    pa = pyaudio.PyAudio()  # create once instead of on every loop iteration
    stream = pa.open(format=FORMAT,
                     channels=CHANNELS,
                     rate=RATE,
                     input=True,
                     start=False,
                     input_device_index=0,  # adjust to your sound card's index
                     frames_per_buffer=CHUNK_SIZE)
    try:
        while True:
            data = record_sentence(stream)
            record_to_file("record.wav", data)
            # os.system("aplay -D hw:0,0 record.wav")  # optional: play the recording back
            wavTotext("record.wav", paraformer)
    except KeyboardInterrupt:
        pass
    finally:
        stream.close()
        pa.terminate()
```
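The sentence detection in the recording loop is a simple energy-threshold voice activity detector with a hangover period. A sentence starts at the first loud chunk. It ends after more than `MAX_WAV` consecutive quiet chunks, or when it reaches the 10-second limit. Segments of `MIN_CHUNKS` chunks or fewer are discarded. The sketch below applies the same rule offline to a list of per-chunk energies, which makes it easy to tune the thresholds on recorded data.

Two parts of the sketch are assumptions rather than the author's code: the function name, and expressing the 10-second limit as a chunk count (10 / 0.064 s ≈ 156 chunks). With `RATE = 16000` and `CHUNK_SIZE = 1024`, `MAX_WAV = int(7.8125) = 7`.

```python
from typing import List, Tuple


def segment_by_energy(energies: List[float], threshold: float = 200,
                      max_quiet: int = 7, min_chunks: int = 11,
                      max_chunks: int = 156) -> List[Tuple[int, int]]:
    """Return (first, last) chunk indices of each detected sentence.

    A sentence starts at the first chunk above `threshold`, ends after more
    than `max_quiet` consecutive quiet chunks or once it is longer than
    `max_chunks` chunks, and is dropped if it has `min_chunks` or fewer chunks.
    """
    segments = []
    start = None  # index of the first loud chunk of the current sentence
    quiet = 0     # consecutive quiet chunks since the last loud one
    for i, e in enumerate(energies):
        if e > threshold:
            quiet = 0
            if start is None:
                start = i
        elif start is not None:
            quiet += 1
        if start is not None:
            length = i - start + 1  # includes the trailing quiet chunks, like the live loop
            if quiet > max_quiet or length > max_chunks:
                if length > min_chunks:
                    segments.append((start, i))
                start, quiet = None, 0
    return segments
```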

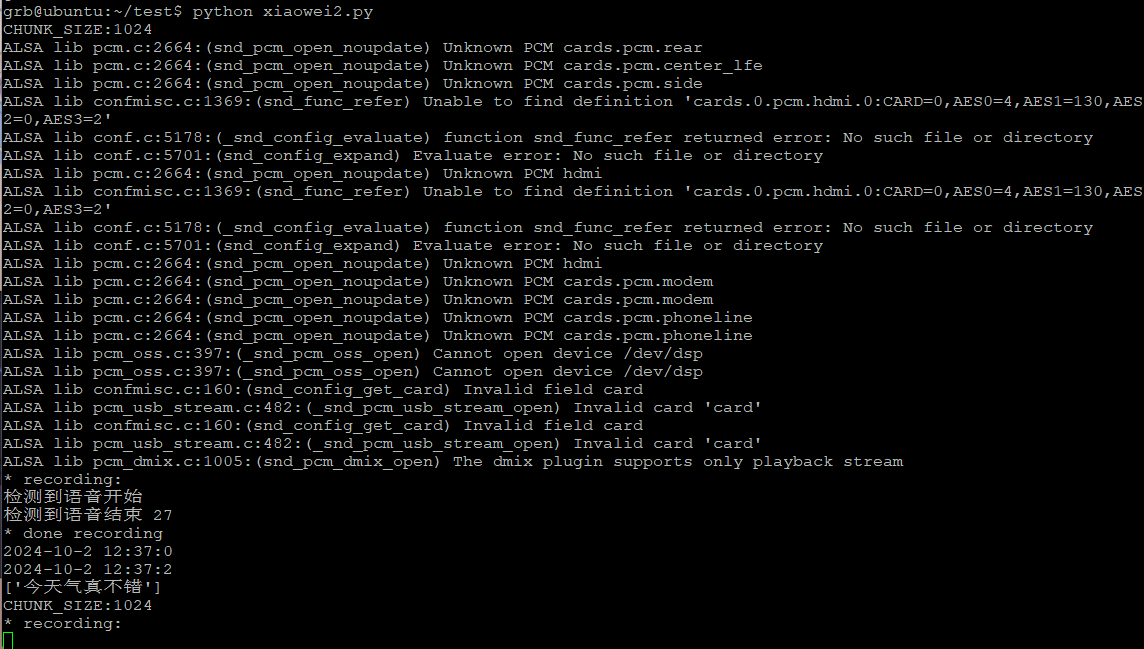

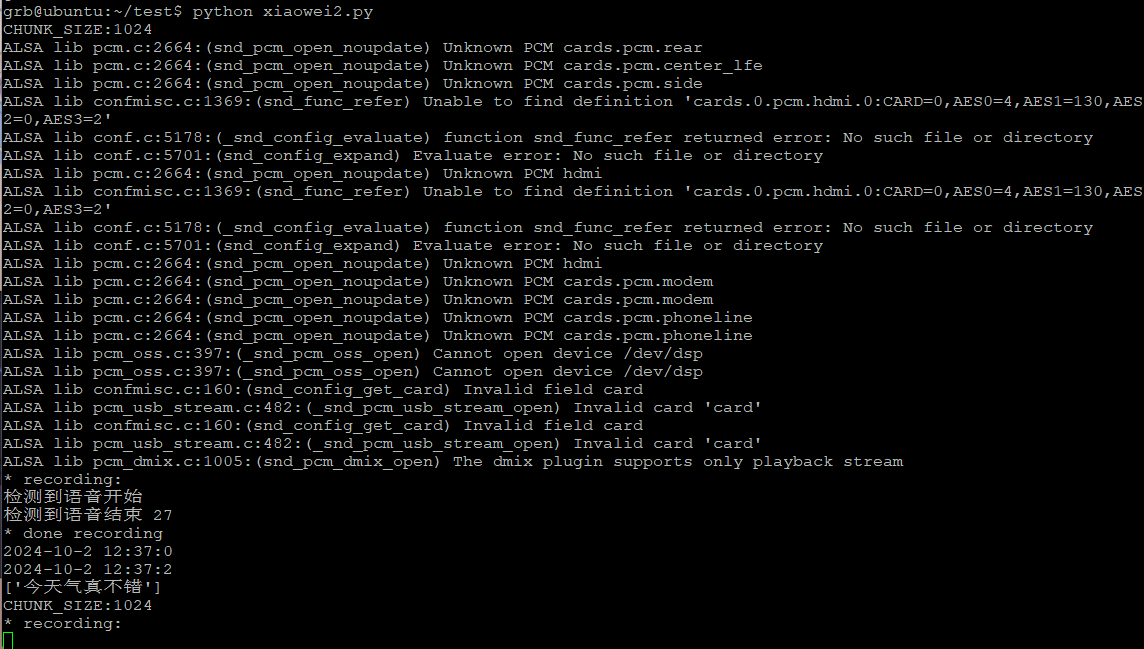

Running the script: just speak into the microphone. Transcribing one sentence takes about 2 seconds.
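The timestamps printed by `getData()` only have one-second resolution, so the 2-second figure is approximate. The ASR call can be timed more precisely with `time.perf_counter()`, a monotonic high-resolution clock. The sketch below uses `timed`, a hypothetical helper that is not part of the original script:

```python
import time
from typing import Any, Callable, Tuple


def timed(fn: Callable[..., Any], *args: Any) -> Tuple[Any, float]:
    """Call fn(*args) and return (result, elapsed seconds) using a monotonic clock."""
    t0 = time.perf_counter()
    result = fn(*args)
    return result, time.perf_counter() - t0
```

In the test script, `result, secs = timed(paraformer, ["record.wav"])` would replace the two `getData()` prints around the ASR call.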